Review – SSD Memblaze PBlaze7 7940 7.68TB – One of the First Gen5 SSDs for Datacenters!

Today, we will test the Memblaze Datacenter NVMe SSD, the PBlaze7 7940 model. This top-of-the-line product has been designed for the high-performance enterprise market, and we will soon see how it performs.

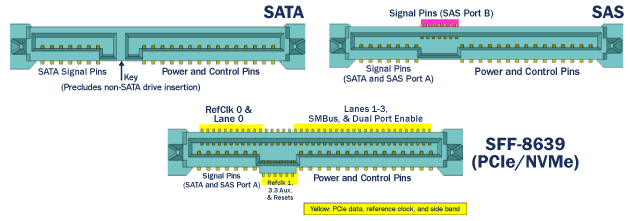

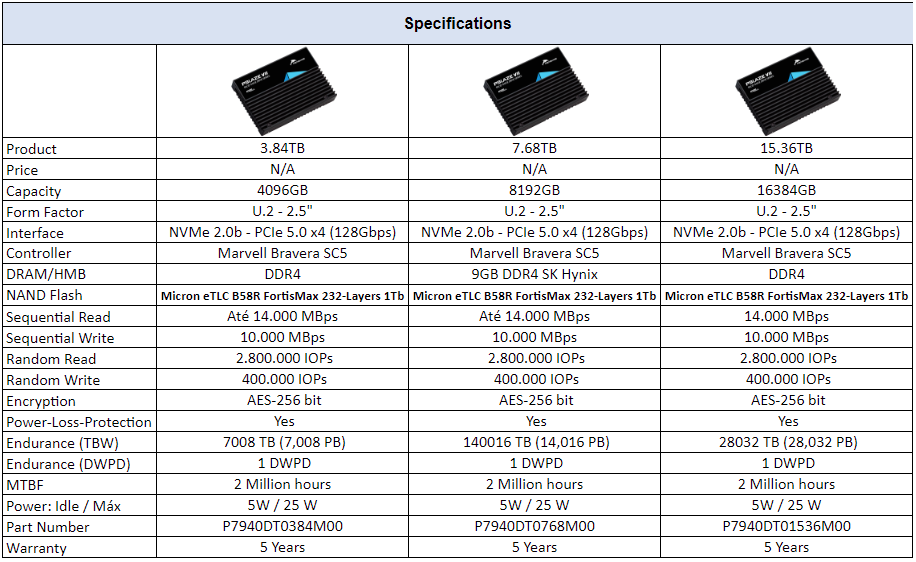

It comes in the U.2 form factor, which physically resembles the well-known and older 2.5″ SATA drives. However, it features a 128Gbps interface (four PCIe 5.0 lanes at 32 GT/s each), the NVMe 2.0 protocol, and capacities ranging from 3.84TB to 15.36TB. Since it’s a very recent product, we don’t have a price (MSRP) for this SSD yet.
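Where does the 128Gbps figure come from? Below is a back-of-the-envelope sketch, assuming the standard PCIe per-lane transfer rates and the 128b/130b line encoding used since PCIe 3.0. Packet and protocol overhead is ignored, so real-world throughput will be somewhat lower than these ceilings.

```python
# Rough PCIe link-bandwidth estimate. Assumes standard per-lane rates and
# 128b/130b encoding (PCIe 3.0 and later); TLP/DLLP protocol overhead is
# ignored, so achievable throughput is lower than these ceilings.

GT_PER_LANE = {3: 8.0, 4: 16.0, 5: 32.0}  # gigatransfers/s per lane


def raw_gbps(gen: int, lanes: int) -> float:
    """Raw line rate in Gbit/s (each transfer carries one bit per lane)."""
    return GT_PER_LANE[gen] * lanes


def usable_gb_per_s(gen: int, lanes: int) -> float:
    """Bandwidth after 128b/130b encoding, in decimal GB/s."""
    return raw_gbps(gen, lanes) * 128 / 130 / 8


for gen in (3, 4, 5):
    print(f"PCIe {gen}.0 x4: {raw_gbps(gen, 4):.0f} Gbps raw, "
          f"~{usable_gb_per_s(gen, 4):.2f} GB/s after encoding")
```

For a PCIe 5.0 x4 link this gives 128Gbps raw and roughly 15.75 GB/s after encoding, which is why a 14 GB/s read rating sits close to what the link can carry.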

It’s worth noting that Memblaze offers this SSD lineup in multiple form factors to suit the requirements of data centers and servers. While we received the 2.5″ U.2 version, it is also available in HHHL AIC, E1.S, and E3.S formats.

The SATA and U.2 connectors have different pin-outs. SATA uses two separate connectors (data and power), whereas U.2 combines everything into a single connector. Moreover, SATA drives rely on the AHCI protocol for host communication, whereas this SSD uses the NVMe 2.0 protocol over a PCIe 5.0 x4 interface.

It comes with a default 14.5% over-provisioning allocation, but as we’ll see later in the review, there are other versions as well (PBlaze7 7946).

Specifications MEMBLAZE PBlaze7 7940 7.68TB

Here are some more detailed specifications for the SSD being tested (7.68TB unit):

SSD Software

Unfortunately, we couldn’t find any Memblaze management software, although firmware updates can be performed through NVMe-CLI.
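For readers who need to update firmware this way, the sketch below only builds (and, if asked, runs) the two usual nvme-cli steps: `nvme fw-download` to transfer the image and `nvme fw-commit` to commit it to a slot. The device path, image filename, slot number and commit action are hypothetical placeholders, not values from Memblaze; check `nvme fw-commit --help` for your nvme-cli version and the vendor’s release notes before touching a production drive.

```python
import shlex
import subprocess


def firmware_update_commands(device: str, image: str,
                             slot: int = 1, action: int = 1) -> list[list[str]]:
    """Build the nvme-cli invocations for a two-step firmware update.

    action=1 corresponds to the NVMe Firmware Commit action "replace the
    image in `slot` and activate it at the next controller reset".
    """
    return [
        ["nvme", "fw-download", device, f"--fw={image}"],
        ["nvme", "fw-commit", device, f"--slot={slot}", f"--action={action}"],
    ]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; only execute it when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


# Hypothetical device and image names; the dry run only prints the commands.
run(firmware_update_commands("/dev/nvme0", "pblaze7_fw.bin"))
```

After the reset, `nvme fw-log /dev/nvme0` reports the firmware slots and which one is active.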

Unboxing

Given that this is a data center SSD, the packaging is quite straightforward. It arrived in a plain brown cardboard box. Upon unboxing, we discovered the SSD securely held in a foam holder to prevent any damage during transportation.

It also comes wrapped in an anti-static bag to protect it from electrostatic discharge.

On the front, the SSD features a simple white label displaying the product’s name, model, serial number, and part number. The back of the SSD showcases an elegant all-black design with a rounded shape in the upper right corner, indicating the presence of an electrolytic capacitor for the Power-Loss-Protection system.

On its side, it also has a debugging connector, which allows data collection through UART and JTAG.

We will now proceed to open the SSD. As seen in the image below, it is secured by 4 star-type screws, also known as Torx T6 screws.

Upon removing the screws and the upper casing, we can see the build quality and attention to detail of the SSD. Its rear casing has a metal plate that aids in heat dissipation, and the entire rear PCB is almost entirely covered by 1mm thermal pads.

To separate the PCB from the upper casing, we need to remove four more Torx T6 screws.

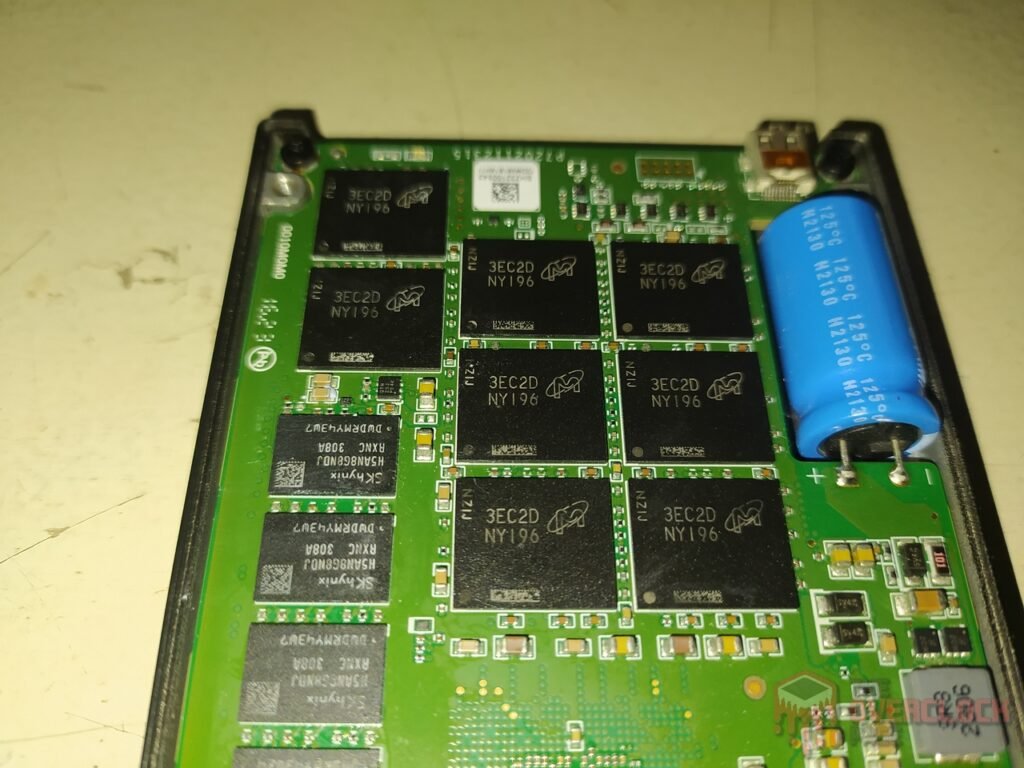

On the front PCB, you’ll find several chips, including the controller, 8 NAND flash packages, 4 DRAM cache chips, and various VRM and PLP (Power-Loss-Protection) ICs.

On the back PCB, we can see another 8 NAND flash packages and 5 DRAM cache chips.

Controller

The SSD’s controller is responsible for all data management, including over-provisioning, garbage collection, and other background functions, and it is a major contributor to the SSD’s performance.

Datacenter SSDs typically use different controllers than those found in consumer market SSDs. In this case, we won’t find the familiar Phison E26 controller, which is currently the only Gen 5.0 controller available in consumer SSDs. Silicon Motion also has its SM2508 controller, but no products featuring it have been released yet.

Leaving that aside, in this SSD Memblaze uses a controller from Marvell: the Bravera SC5 MV-SS1333.

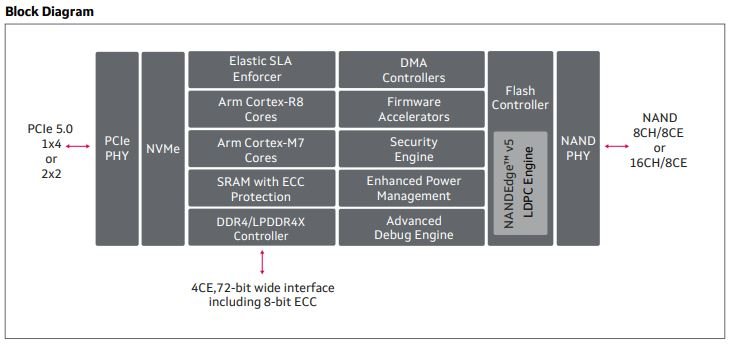

Being a datacenter controller, it includes features not commonly found in consumer SSD controllers. The MV-SS1333 is part of a lineup with two variants: the MV-SS1331 with 8 NAND channels and the MV-SS1333 with 16 channels.

This controller uses a 32-bit Arm architecture with 10 + 1 processing cores (a deca-core cluster plus one additional core). Among them are Arm® Cortex®-R8 primary cores that deliver the best performance in NAND flash management, along with several Arm® Cortex®-M7 cores that add flexibility in terms of efficiency. These M7 cores are the same as those found in the TenaFe TC2200 controller we encountered in our review of the Netac NV5000-T. Additionally, it includes an Arm® Cortex®-M3 core with integrated SRAM for instructions and data, as well as cryptographic engines (AES, SHA, RSA, ECC) inside a secure boundary, responsible for the TCG security standards, control of all secure units, and key management.

It also supports DDR4-3200 MT/s and LPDDR4-4266 MT/s DRAM Cache with a 72-bit DDR interface (64 data bits + 8 ECC bits).

Its 16 channels use an NV-DDR4 bus running at 1600 MT/s (800 MHz), which is slower than the Phison E26’s NAND interface, since this Marvell controller has been on the market longer. However, one of its main differentiators is support for interleaving up to 8 Chip Enables (CE) per channel, which lets the controller communicate directly with up to 128 dies (16 × 8). This is uncommon among consumer SSD controllers.

Most top-tier consumer controllers, such as the Phison E18 and Phison E26, support up to 32 dies (8 channels with 4 CE each), while this Marvell controller offers 16 channels with 8 CE each.
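The die counts above are just channels × chip enables, and multiplying the channel count by the bus rate gives a raw ceiling for the NAND side. A minimal sketch, assuming one die per chip enable (as the text counts them) and an 8-bit NAND bus that moves one byte per transfer; these are raw bus ceilings, not achievable throughput.

```python
def nand_topology(channels: int, ce_per_channel: int, bus_mts: int,
                  bus_width_bytes: int = 1) -> tuple[int, float]:
    """Return (addressable dies, aggregate NAND bus bandwidth in GB/s).

    Treats each chip enable as one die and assumes an 8-bit NAND bus
    (one byte per transfer). This is a raw bus ceiling, not throughput.
    """
    dies = channels * ce_per_channel
    per_channel_mb_s = bus_mts * bus_width_bytes
    return dies, channels * per_channel_mb_s / 1000


print(nand_topology(16, 8, 1600))  # MV-SS1333 as described above
print(nand_topology(8, 4, 2400))   # hypothetical 8-channel, 4-CE controller at 2400 MT/s
```

Even at 1600 MT/s, 16 channels give a 25.6 GB/s raw NAND-bus ceiling (128 dies), whereas an 8-channel design needs 2400 MT/s just to reach 19.2 GB/s with 32 dies.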

DRAM Cache or H.M.B.

High-performance top-tier SSDs require a buffer to store mapping tables, such as the Flash Translation Layer (FTL) look-up table. This buffer improves random performance and responsiveness.

Datacenter SSDs rely heavily on DRAM cache for mapping tables, although they don’t always follow the 1:1000 ratio common in consumer SSDs, where roughly 1GB of DRAM cache is provided per 1TB of capacity.
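The 1:1000 rule of thumb falls out of a common FTL design: a flat page-level table holding one 4-byte physical address per 4 KiB logical page, i.e. 4 bytes of DRAM per 4,096 bytes of flash. A minimal sketch under that assumption; Memblaze does not document its actual FTL layout, which may use different entry sizes or mapping granularity.

```python
def ftl_table_bytes(capacity_bytes: int, mapping_unit: int = 4096,
                    entry_bytes: int = 4) -> int:
    """Size of a flat logical-to-physical table: one entry per mapping unit."""
    entries = capacity_bytes // mapping_unit
    return entries * entry_bytes


user_capacity = 7_680_000_000_000  # 7.68 TB (decimal)
table = ftl_table_bytes(user_capacity)
# Ratio of table to capacity is entry_bytes / mapping_unit = 4 / 4096, i.e. 1:1024.
print(f"{table / 1e9:.2f} GB of mapping table")
```

For the 7.68TB model this works out to about 7.5GB, in the same range as the DRAM fitted to this drive.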

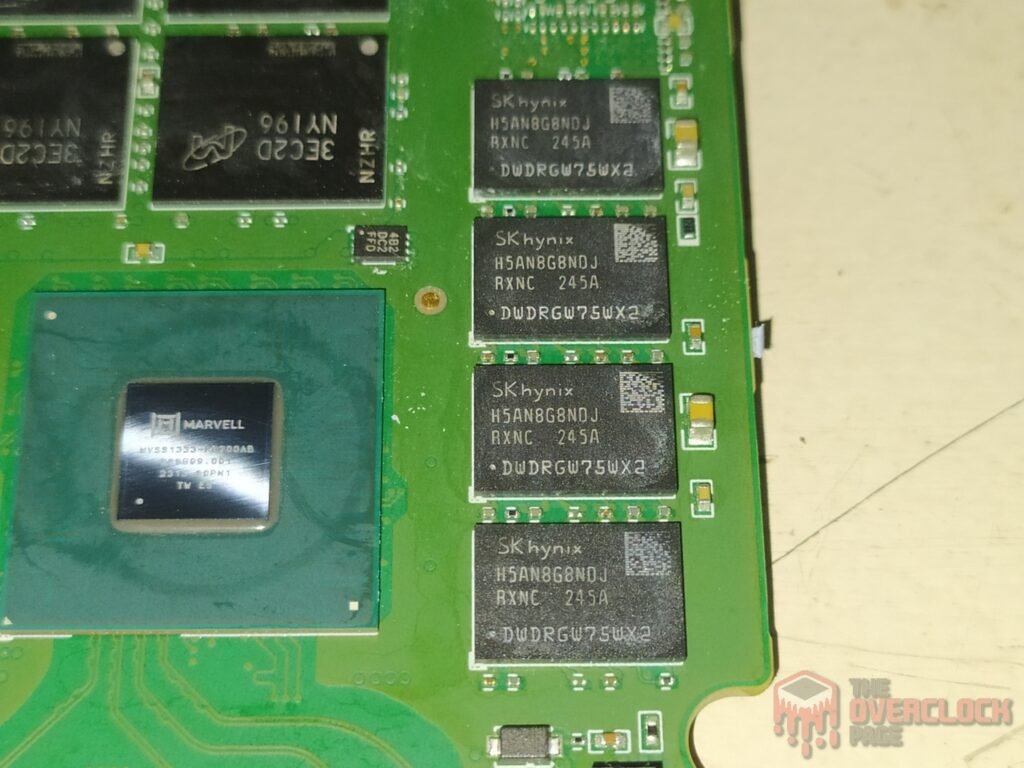

Near the controller on the front PCB, you’ll find 4 DRAM Cache modules manufactured by SK Hynix (model H5AN8G8NDJR-XNC). These modules are DDR4-3200 MT/s with a density of 8Gb (1GB) each and operate with CAS-22 latency. In total, there is 4GB of DRAM Cache on the front PCB.

On the back PCB, the SSD has 5 more SK Hynix modules of the same model, H5AN8G8NDJR-XNC, adding another 5GB of DDR4.

Overall, this SSD has 9GB of DDR4-3200 MT/s CL-22 DRAM Cache.
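Nine chips looks like an odd count, but it lines up with the controller’s 72-bit DDR interface mentioned earlier: H5AN8G8NDJR is an 8Gb x8 part, and nine x8 chips form a 72-bit bus, which would leave eight chips (8GB) for data and one (1GB) for ECC. This is our inference from the chip count and the controller specification, not something Memblaze documents; the sketch assumes all nine chips form a single 72-bit rank.

```python
def dram_layout(chips: int, density_gbit: int, width_bits: int,
                ecc_bits: int = 8) -> dict:
    """Split a single-rank DRAM array into data and ECC capacity (in GB)."""
    bus_width = chips * width_bits
    ecc_chips = ecc_bits // width_bits
    return {
        "bus_width_bits": bus_width,
        "total_gb": chips * density_gbit / 8,
        "data_gb": (chips - ecc_chips) * density_gbit / 8,
        "ecc_gb": ecc_chips * density_gbit / 8,
    }


# 4 front + 5 back H5AN8G8NDJR chips: 8 Gbit each, x8 organization.
print(dram_layout(chips=4 + 5, density_gbit=8, width_bits=8))
```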

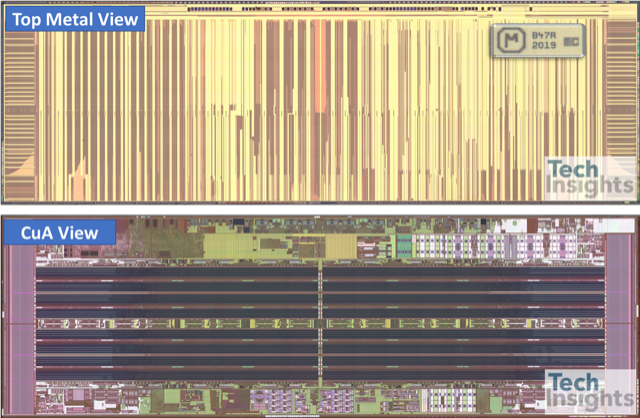

NAND Flash

Regarding its storage chips, the 7.68TB SSD has 16 NAND flash packages marked “NY196.” Using the manufacturer’s decoder, these turn out to be “MT29F4T08EMLCHD4-T:C” from the American manufacturer Micron, specifically the B58R FortisMax model. They use 1Tb (128GB) dies with 232 data layers out of 253 total gate layers, for an array efficiency of 91.7%.
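Array efficiency here is simply the share of the layer stack that stores data. A tiny sketch using the B58R figures quoted above:

```python
def array_efficiency(active_layers: int, total_layers: int) -> float:
    """Share of the stacked gate layers that actually store data."""
    return active_layers / total_layers


# Micron B58R: 232 data layers out of 253 total.
print(f"{array_efficiency(232, 253):.1%}")
```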

The B58R dies are of the eTLC (Enterprise Triple-Level Cell) type, but what is eTLC, and how does it differ from traditional TLC?

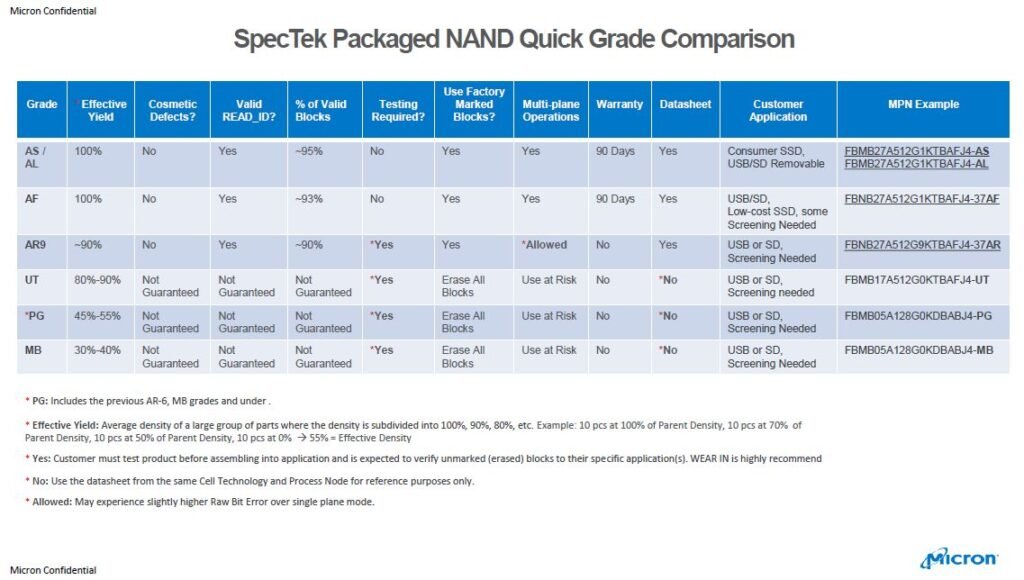

It’s important to note that NAND Flash chips come in various durability categories, with different manufacturers categorizing their wafers accordingly. This SSD uses Micron’s FortisMax chips, which offer greater endurance than those in consumer SSDs. However, they are more expensive due to their selection from higher-quality wafers.

FortisFlash is the best-known grade and the one most commonly used in consumer SSDs. MediaGrade, typically seen in QLC dies, tends to have slightly lower endurance than FortisFlash.

And finally, there is SpecTek, a Micron subsidiary that resells DRAM and NAND chips which sometimes haven’t gone through all of Micron’s endurance and quality-control tests, offering them under the SpecTek brand as more affordable options. They tend to have lower endurance.

However, these SpecTek dies also have durability classifications and recommended use, as shown in the image below.

In this SSD, each NAND package contains 4 dies of 1Tb each, for 512GB per package and 8TB in total across the 16 packages. They communicate with the controller over a 1600 MT/s bus, which unfortunately is well below the 2400 MT/s they are capable of.
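NAND densities are binary (1Tb = 2^40 bits), while drive capacities are marketed in decimal terabytes, so the “8TB” of raw flash is really 8TiB, about 8.8TB in the units used for the 7.68TB label. A quick check using the die and package counts above:

```python
DIE_BITS = 2**40          # one 1 Tbit die
DIES_PER_PACKAGE = 4
PACKAGES = 16

raw_bytes = PACKAGES * DIES_PER_PACKAGE * DIE_BITS // 8
print(f"per die:     {DIE_BITS // 8 / 2**30:.0f} GiB")
print(f"per package: {DIES_PER_PACKAGE * DIE_BITS // 8 / 2**30:.0f} GiB")
print(f"raw total:   {raw_bytes / 2**40:.0f} TiB = {raw_bytes / 1e12:.2f} TB (decimal)")
```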

Consumer SSDs with sequential read and write speeds around 10,000 MB/s also run their NAND at 1600 MT/s. This isn’t due to physical limitations but rather to concerns about power consumption and thermal dissipation in M.2 SSDs, which are less of a constraint in U.2 SSDs. It’s worth noting, however, that M.2 SSDs reaching 12,000 MB/s already run these NANDs at 2000 MT/s, and the new generation of Gen 5 SSDs rated at 14,000 MB/s is expected to use their full 2400 MT/s.

Each of these dies has 6 planes so that when the controller accesses each die, it can increase parallelism and, thereby, performance. It’s important to highlight that this represents a significant boost in performance compared to Micron’s previous models with 176 layers.

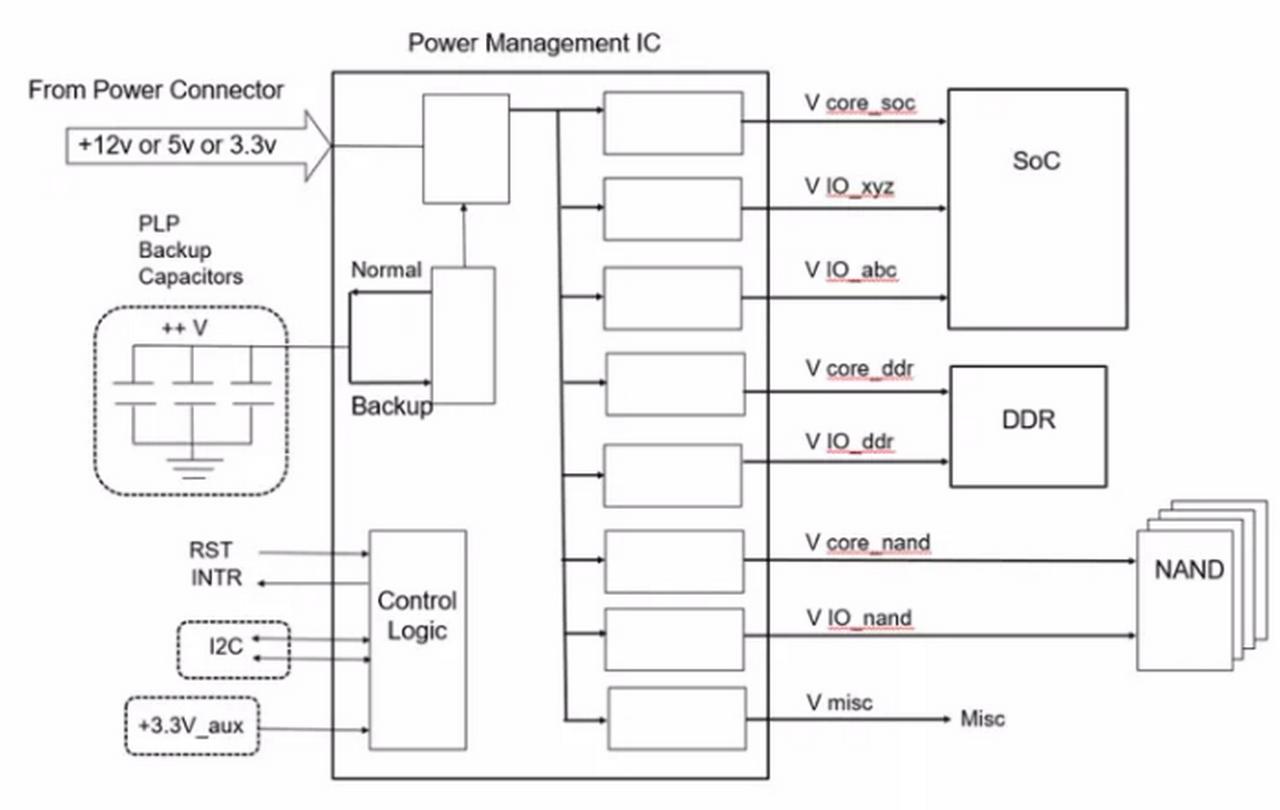

PMIC (Power Delivery)

Like any electronic component that performs work, SSDs also have a power consumption level that can range from a few milliwatts to nearly 25 watts, close to the limits of some connectors or slots. The circuit responsible for all power management is the PMIC, which stands for “Power Management IC,” a chip responsible for supplying power to other components.

The main power IC on this SSD is a PMIC from Integrated Device Technology (IDT), a Renesas subsidiary, model P8330-5M2. It is a single- or multi-channel SSD power management solution.

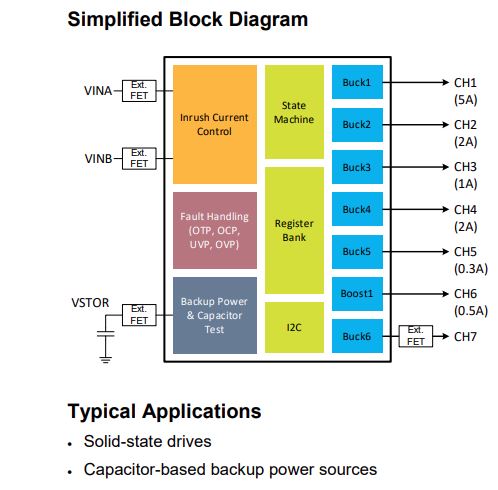

As the block diagram above shows, it can drive up to 6 step-down regulators with internal MOSFETs, each channel with its own current and voltage rating. It’s designed specifically for SSDs and offers various protection features such as overcurrent, overvoltage, undervoltage, undercurrent, and overtemperature protection, among others.

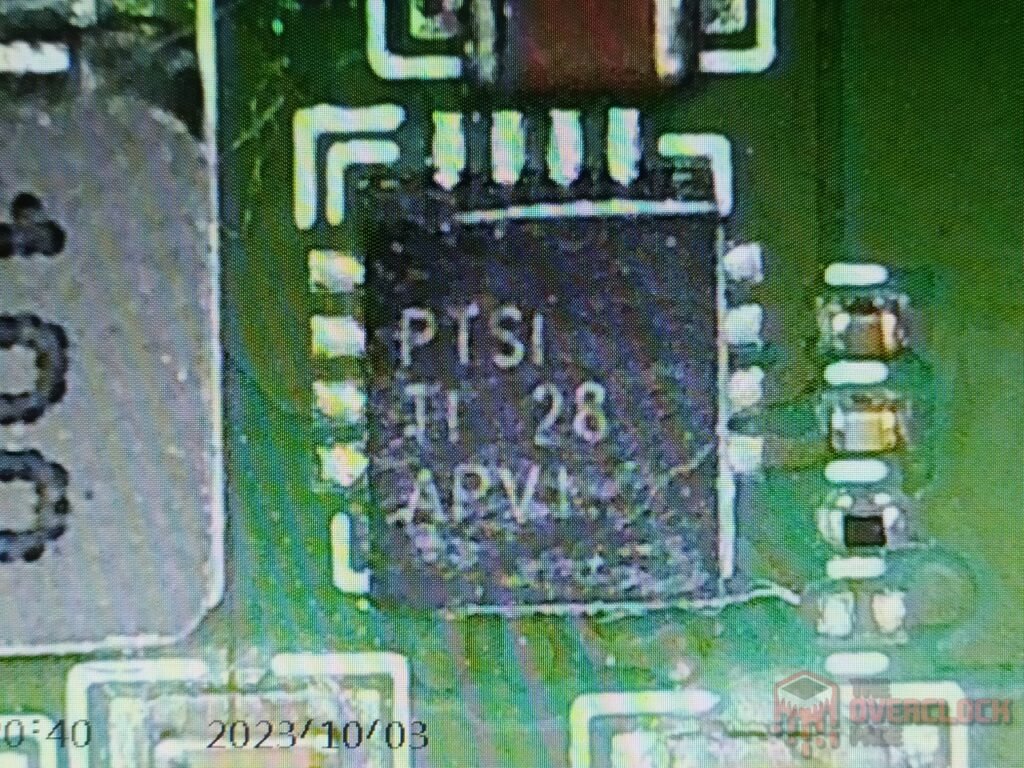

Another IC we find in this SSD is marked as “PTSI TI,” which is made by Texas Instruments.

It’s a TPS62130 buck converter that accepts input voltages from 3V to 17V and delivers currents up to 3A. It has a thermal resistance of up to 4.5 °C/W.

We also found another interesting IC marked “4B2DC2,” a Renesas temperature sensor of the type commonly used on DDR4 memory modules.

Power-Loss-Protection

Power Loss Protection in Datacenter SSDs is crucial to ensure data integrity during power failures. By using capacitors or temporary energy storage, it enables the safe completion of ongoing operations, preventing data corruption in critical environments. This ensures high data availability and reliability.

This drive uses a large electrolytic capacitor from Nichicon, a Japanese manufacturer that produces a wide range of capacitors and is one of the best capacitor makers in the world.

This electrolytic capacitor is rated for up to 35V, with a capacitance of 1800µF, and can operate at temperatures of up to 125°C. Through some calculations, we estimate that the SSD should retain enough energy for about 10 ms (milliseconds) to transfer the data from the DRAM cache to the NAND flash.
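The inputs behind the 10 ms estimate aren’t stated, so here is a hedged sanity check using E = ½CV². The 35V rating is used only as an upper bound: the actual charge voltage, the cutoff voltage at which the regulators drop out, and converter losses are not published, so all three are assumptions.

```python
def stored_energy_j(capacitance_f: float, v_charge: float,
                    v_cutoff: float = 0.0) -> float:
    """Energy released as a capacitor discharges from v_charge to v_cutoff."""
    return 0.5 * capacitance_f * (v_charge**2 - v_cutoff**2)


C = 1800e-6        # 1800 µF
V_RATED = 35.0     # voltage rating, used only as an upper bound (assumption)
LOAD_W = 25.0      # the drive's rated maximum power
HOLDUP_S = 0.010   # the ~10 ms estimated in the text

upper_bound = stored_energy_j(C, V_RATED)
needed = LOAD_W * HOLDUP_S
print(f"stored at 35 V: {upper_bound:.2f} J, "
      f"needed for 10 ms at 25 W: {needed:.2f} J")
```

At the rated voltage the margin is comfortable (about 1.1 J stored versus 0.25 J needed), but because energy scales with V², a lower charge voltage or a higher cutoff shrinks it quickly.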

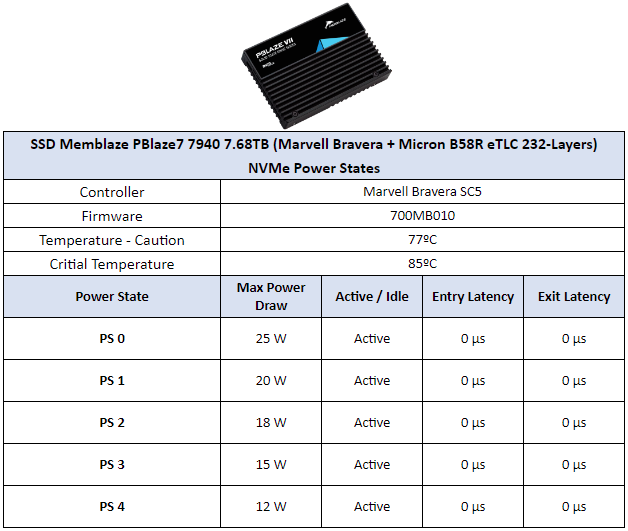

SSD Power States

Building on the power consumption discussion in the previous sections, this segment looks more closely at the SSD’s power states.

One difference between this enterprise/DC SSD and consumer drives lies in the power states. The NVMe specification allows up to 32 power states, but drives typically implement around five. Consumer SSDs usually expose three active and two idle states, whereas this drive has five active power states with varying consumption based on specific workloads.

CURIOSITIES ABOUT SSD MEMBLAZE PBLAZE7 7940

Just like integrated circuits on a RAM module can vary, the same can happen with SSDs, where there are cases of component changes like controllers and NAND flash chips.

Memblaze offers this product in two series aimed at different segments. The 7940 series comes in capacities of 3.84TB, 7.68TB, and 15.36TB, all with a 14.5% over-provisioning volume.

There is also the 7946 series, with slightly better performance and capacities of 3.2TB, 6.4TB, and 12.8TB, which provides a larger over-provisioning volume of approximately 37.4%. These models also offer greater endurance, rated at 3 DWPD versus 1 DWPD for the 7940 series.
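Both over-provisioning figures can be reproduced from the NAND fitted to our sample, assuming the 6.4TB 7946 carries the same 64 dies (8TiB raw) as the 7.68TB 7940 and that OP is expressed relative to user capacity. The sketch also converts the DWPD ratings into total terabytes written (TBW), assuming the 5-year warranty applies to both series.

```python
RAW_TB = 64 * 2**40 / 8 / 1e12   # 64 x 1 Tbit dies, in decimal TB (~8.80)
WARRANTY_YEARS = 5


def over_provisioning(user_tb: float, raw_tb: float = RAW_TB) -> float:
    """Spare area relative to user capacity: (raw - user) / user."""
    return (raw_tb - user_tb) / user_tb


def tbw(user_tb: float, dwpd: float, years: int = WARRANTY_YEARS) -> float:
    """Terabytes written over the warranty at the rated drive writes per day."""
    return user_tb * dwpd * 365 * years


for model, user_tb, dwpd in (("7940", 7.68, 1), ("7946", 6.4, 3)):
    print(f"{model} {user_tb}TB: OP {over_provisioning(user_tb):.1%}, "
          f"~{tbw(user_tb, dwpd):,.0f} TBW over {WARRANTY_YEARS} years")
```

Under these assumptions, the 7946’s larger spare area goes hand in hand with roughly 2.5 times the total write budget of the 7940 at these two capacity points.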

TEST BENCH

– Operating System: Windows Server 2019 64-bit (Build: 22H2)

– CPU: Intel Core i7-13700K (8C/8T) – All Core 5.7GHz – (Hyper-threading and E-cores disabled)

– RAM: 2 × 16 GB DDR4-3200MHz CL-16 Netac (w/ XMP)

– Motherboard: MSI PRO Z790-P WIFI DDR4 (Bios Rev: 7E06v18)

– GPU: Raptor Lake UHD Graphics 770

– SSD (OS): SSD IronWolf 125 1TB (Firmware: SU3SC011)

– SSD DUT: SSD Memblaze Pblaze7 7940 7.68TB (Firmware: 700MB010)

– Intel Z790 Chipset driver: 10.1.19376.8374.

– Quarch PPM QTL1999 – Used for power measurement.

ADAPTORS

As this is a U.2 SSD, consumer PCs don’t have the required interface to connect it, so a U.2-to-PCIe adapter is needed. In this case, we are using a PCIe 5.0 x4 NVMe adapter.

The main problem is finding adapters fast enough for SSDs like this one: U.2-to-PCIe 3.0 adapters are fairly affordable, typically in the $200-$300 range, whereas Gen 5.0 adapters can cost more than $700, not including the SSD itself.

TESTING METHODOLOGY

Because this is a server-oriented SSD, it doesn’t make sense to test it using benchmarks intended for consumer drives. Instead, we will use our test suite for Datacenter/Enterprise SSDs.

Here’s the testing process:

- Multiple Secure Erase operations are performed at the end of each benchmark.

- To prepare the SSD, data is sequentially written to at least 2x the drive’s capacity before logging.

- Pre-conditioning of the SSD is performed with a high queue depth workload specific to each benchmark before logging.

- Once the SSD is ready, data is written for 5 minutes to monitor performance.

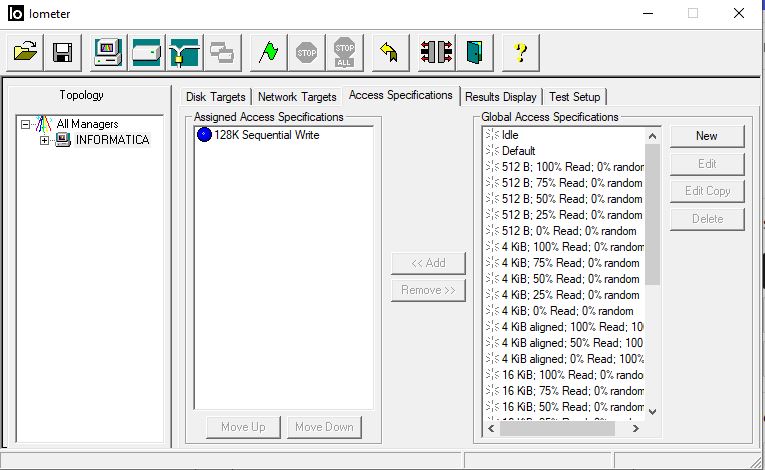

- The software used is IOmeter 1.1.

WHERE TO BUY

So far, we haven’t been able to find this drive for sale anywhere.

IOmeter – Sequential and Random Performance

Sequential: 128 KiB blocks, QD256, 1 thread

Random: 4 KiB blocks, QD32, 1 thread (100% aligned; write / read)

Before measuring its speeds, the SSD needs to be “prepared” or pre-conditioned, so that the performance it provides is consistent. SSDs have three states: Fresh-out-of-Box (FOB), the Transitioning state, and the Steady State.

FOB represents the state as soon as the SSD begins to be tested with any workload. Transition, as the name suggests, is the period of change until it reaches the Steady State. Steady State is the phase in which the SSD’s performance remains consistent under the workload for as long as that workload continues.

Here’s a simple analogy to grasp these three states: Picture yourself as a marathon runner. At the race’s beginning, you go full throttle, reaching your maximum speed. However, sustaining that pace throughout the entire course is physically impossible. Let’s say that during this peak moment at the beginning of the race, you manage to average 15 km/h. As you progress along the route, you begin to lose speed.

The moment when your speed begins to fluctuate would be the Transitioning state. However, shortly after you start to stabilize, you would settle into a speed that you can maintain until the end of the race, and this would be the Steady State.
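One common way to decide mechanically that a run has left the transition phase comes from the SNIA Solid State Storage Performance Test Specification (PTS): over a window of five measurement rounds, the spread of the values must stay within 20% of their average, and the rise or fall of a least-squares fit line across the window must stay within 10% of the average. The stdlib-only sketch below illustrates that PTS-style check; it is not necessarily the exact criterion behind the charts in this review, and the sample values are made up.

```python
def is_steady_state(rounds: list[float], window: int = 5,
                    max_range: float = 0.20, max_slope: float = 0.10) -> bool:
    """SNIA PTS-style steady-state test on the last `window` rounds."""
    if len(rounds) < window:
        return False
    y = rounds[-window:]
    avg = sum(y) / window
    if avg <= 0:
        return False
    # 1) Data excursion: spread of the measured values.
    if max(y) - min(y) > max_range * avg:
        return False
    # 2) Slope excursion: rise/fall of the least-squares line over the window.
    x_mean = (window - 1) / 2
    sxx = sum((x - x_mean) ** 2 for x in range(window))
    sxy = sum((x - x_mean) * (v - avg) for x, v in enumerate(y))
    slope = sxy / sxx
    return abs(slope) * (window - 1) <= max_slope * avg


# Hypothetical per-round IOPS averages.
fob_to_transition = [990_000, 620_000, 410_000, 520_000, 460_000]
settled = [402_000, 398_000, 405_000, 400_000, 401_000]
print(is_steady_state(fob_to_transition), is_steady_state(settled))
```

In runner terms, the first list is still speeding up and slowing down, while the second has settled into a pace it can hold.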

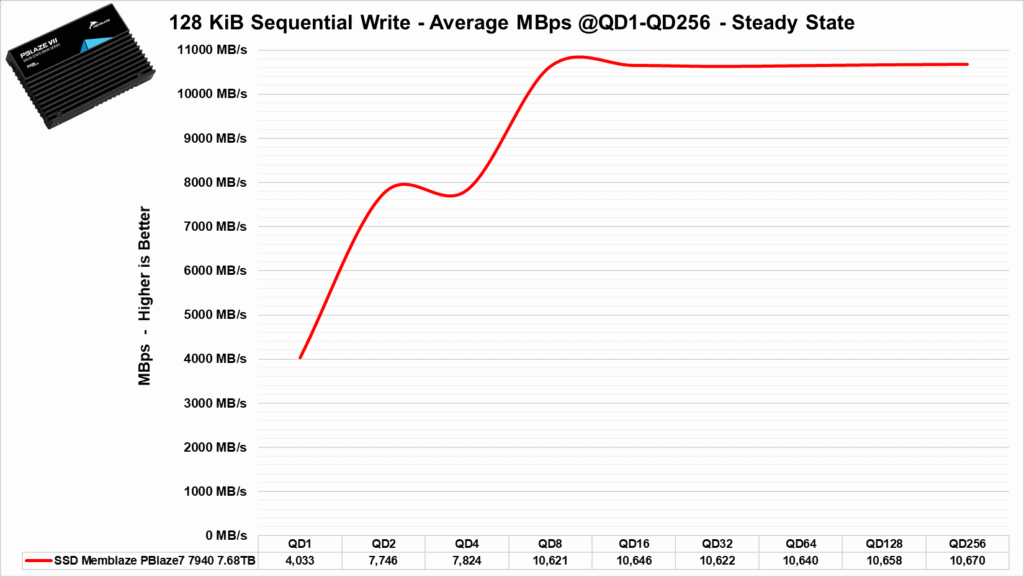

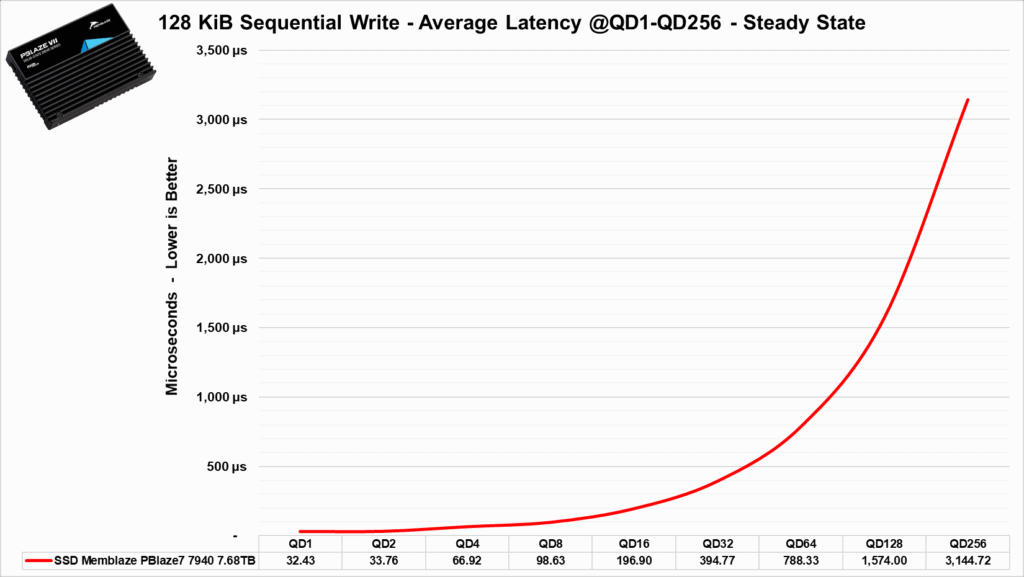

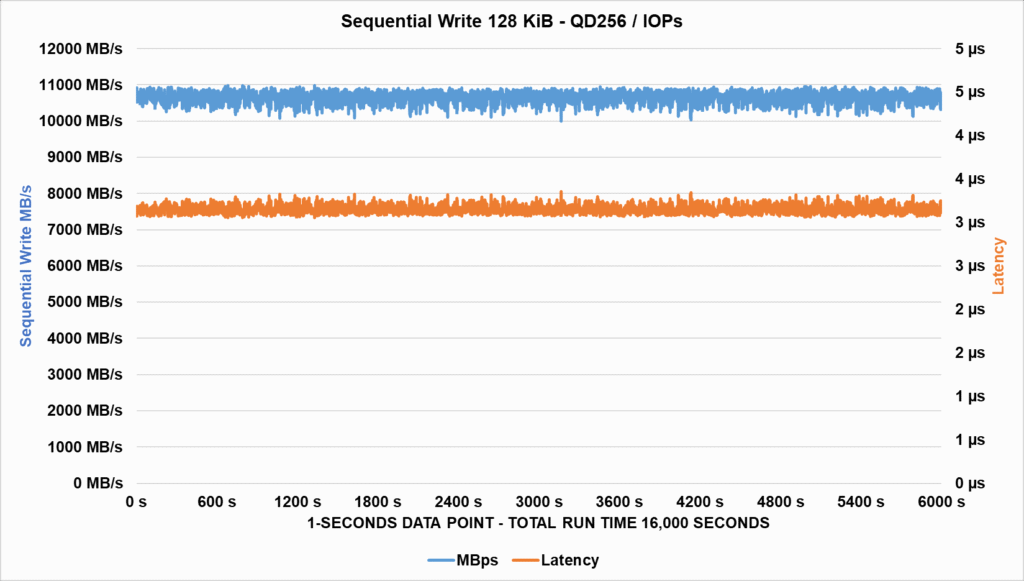

Starting with a sequential write test using 128 KiB blocks, we can see that the speed is quite constant throughout the test, since enterprise SSDs like this one do not use an SLC cache. Latencies were also good in this case.

Testing its sequential speeds at multiple queue depths, we can see that the SSD reaches its speed of 10 GB/s at QD8 and maintains it up to QD256. At lower queue depths, such as 1 to 4, it ranges from 4 GB/s to 7 GB/s.

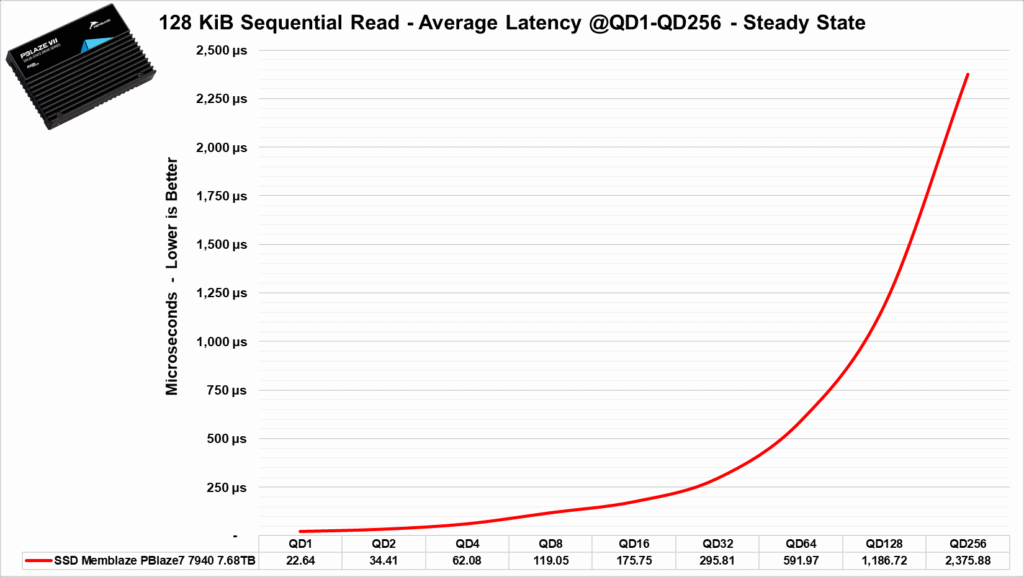

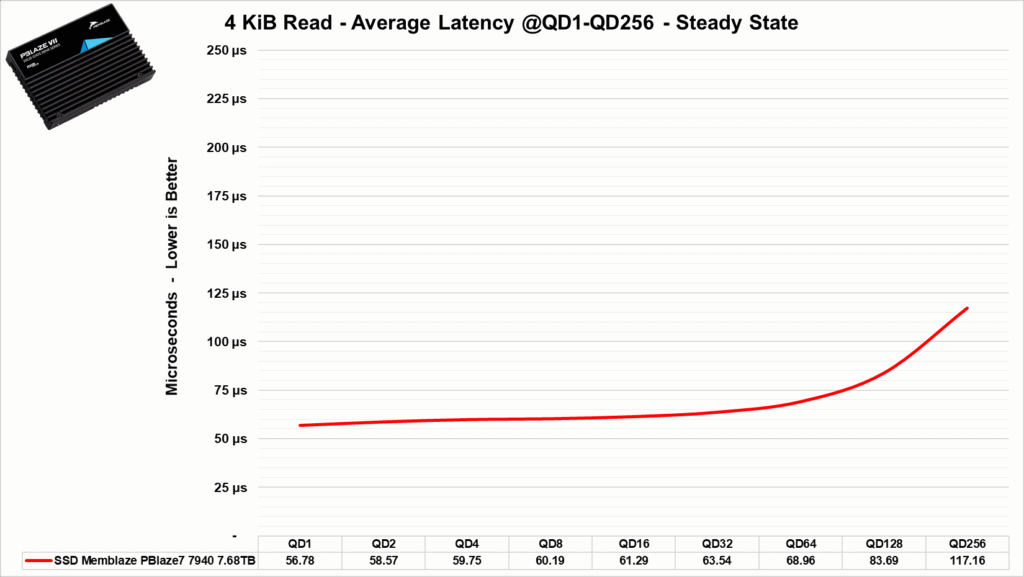

As for its latencies, we can observe that it showed incredible results, with latencies below 100 µs at QD from 1 to 8. Only when the QD increased beyond 128 did its latency shoot up.

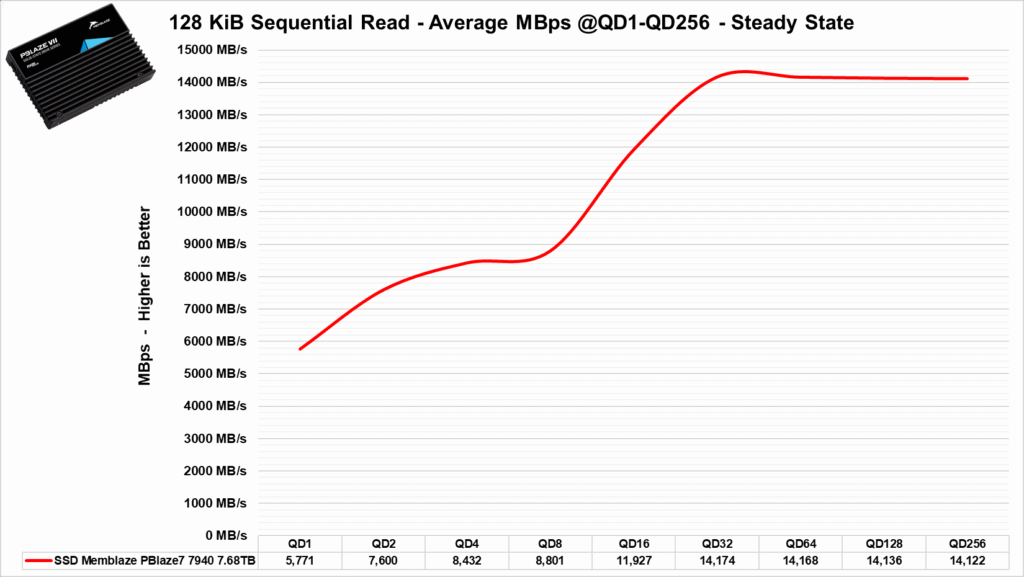

In reads, it reaches its rated 14 GB/s only at QD32 and above. From QD1 to QD16, it ranges from 5.7 GB/s to 12 GB/s.

In terms of latency, it stays around 120µs at QD8, which is excellent. However, at higher queue depths such as 256, latency climbs into the millisecond range.

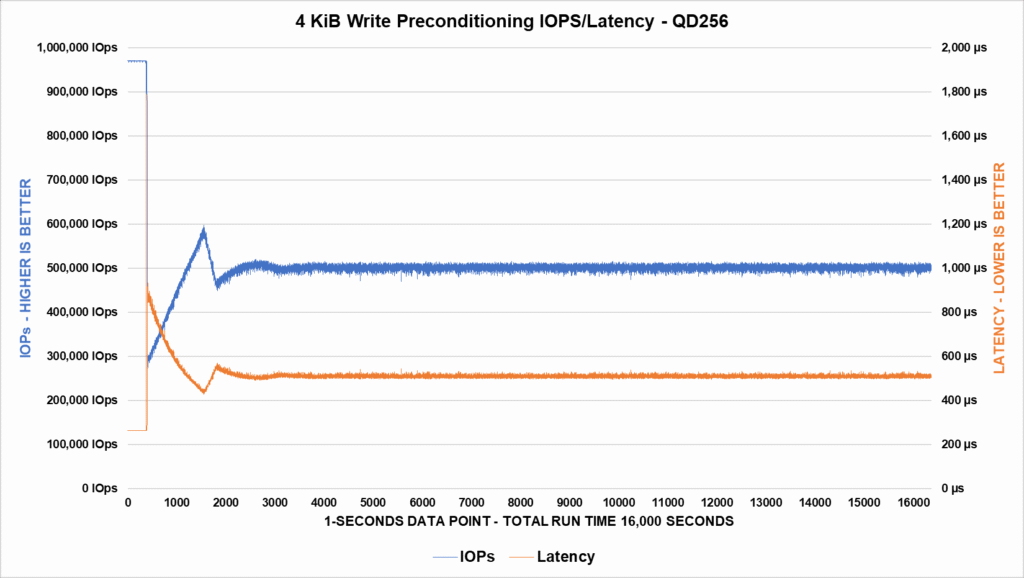

Now, we will precondition the SSD to perform benchmarks in 4 KiB.

We can see that the SSD starts with nearly 1 million IOPS in its FOB state, but then drops dramatically and then rises again until it stabilizes after 3000 seconds. This period is the transition period, and from then until the end of the benchmark is the Steady State.

Its latency follows the same pattern.

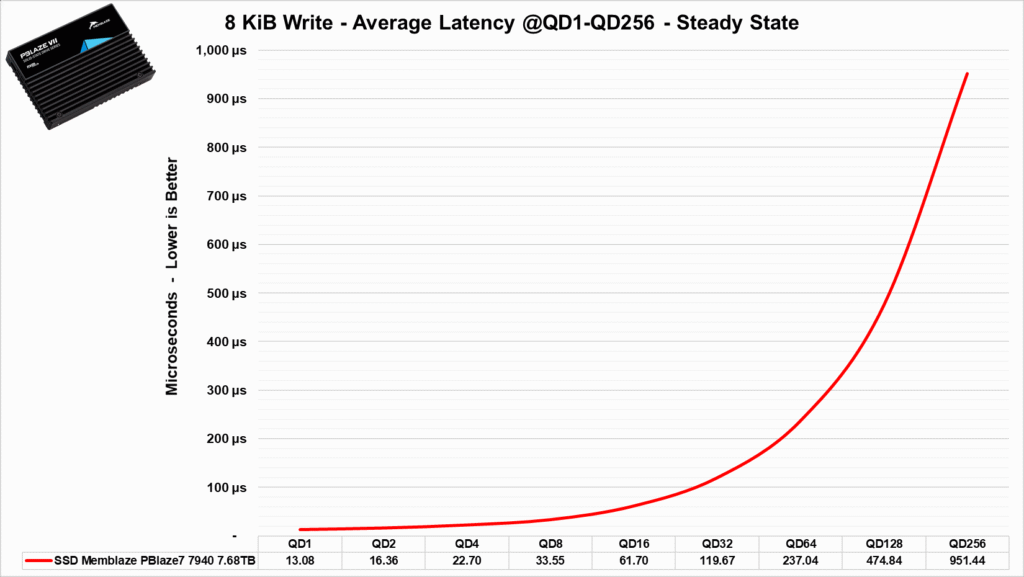

After completing preconditioning, we move on to the write test.

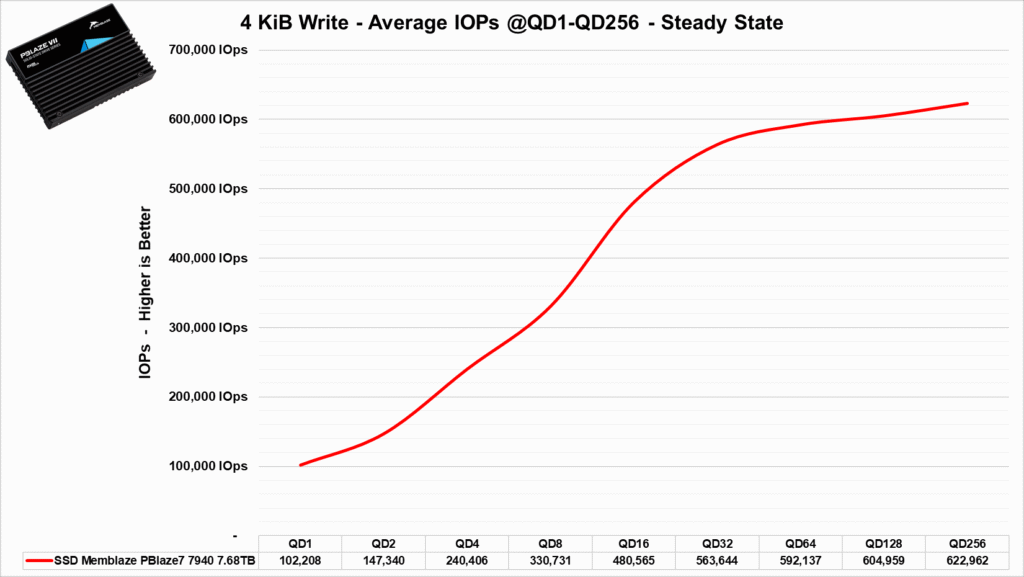

We can see that at a QD of 256 in the Steady State, it delivers a performance of over 600K IOPS, which is well above the manufacturer’s claim of 400K IOPS. It scales remarkably well from a QD of 1 up to QD 256.

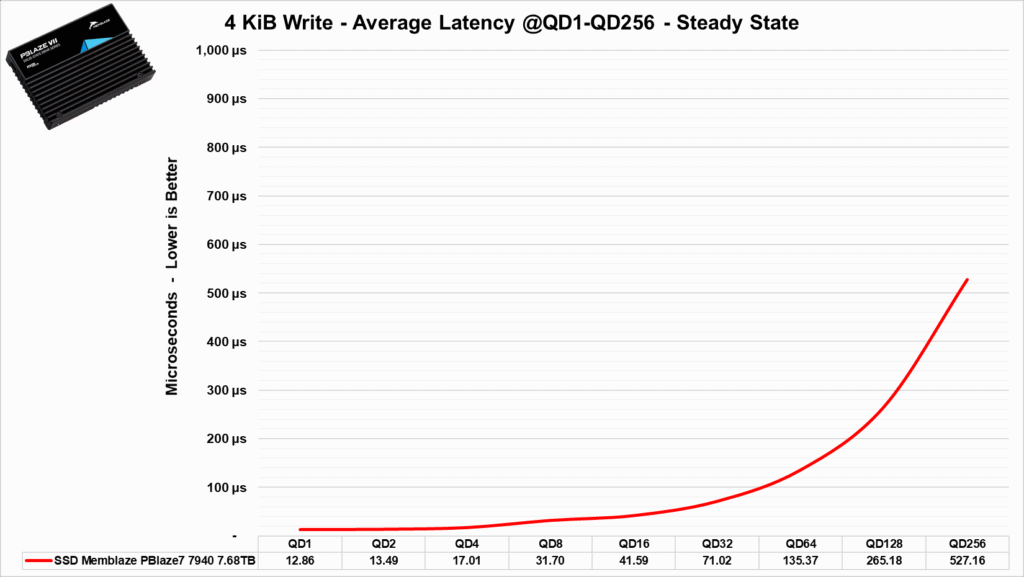

In terms of latency, we see the same pattern – extremely low latencies from QD 1 to QD 32, with a more significant increase only at a QD of 128 and above.

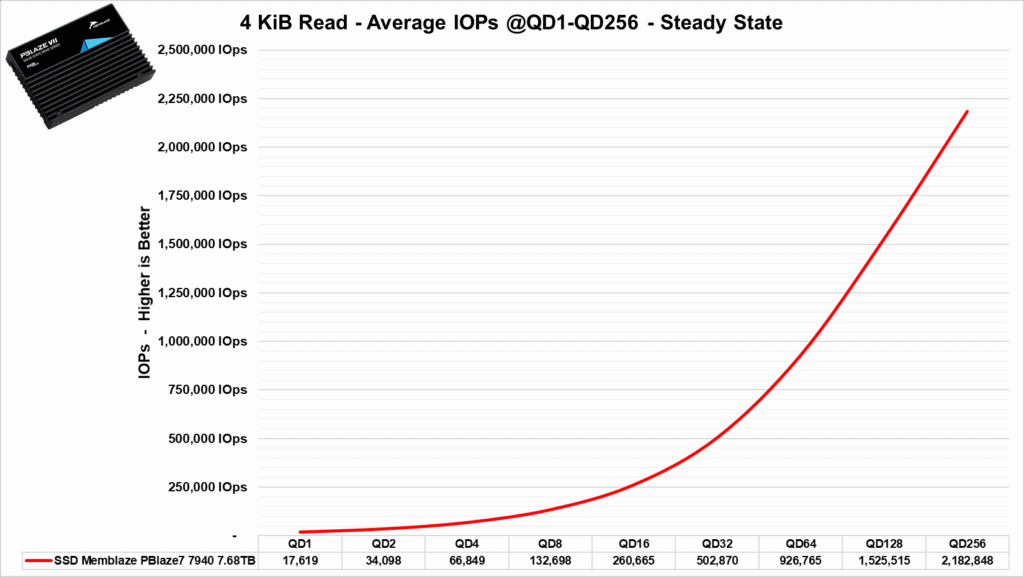

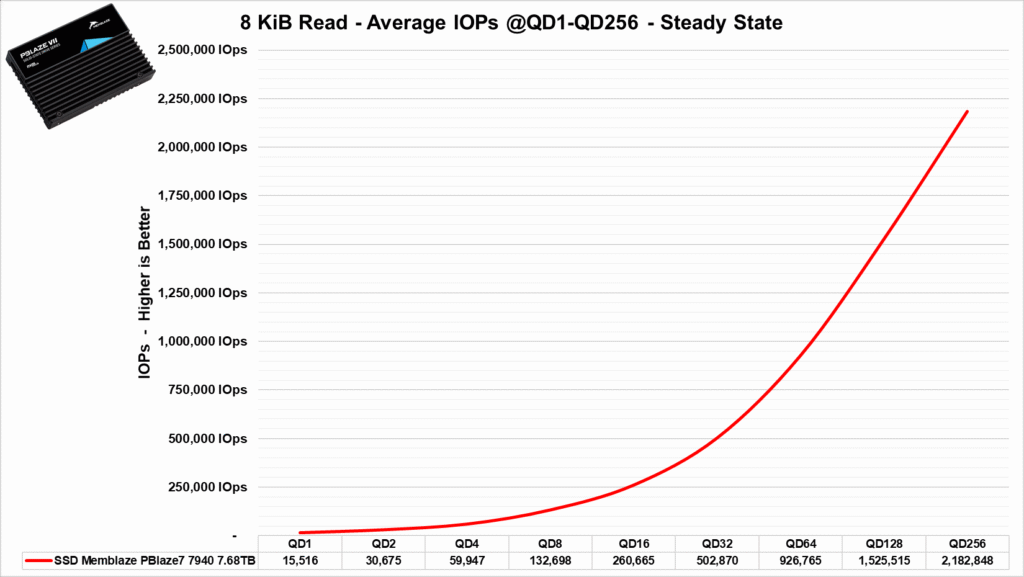

In the case of read performance, the SSD achieves approximately 2.18 million IOPS, which is slightly below the manufacturer’s claim of 2.8 million IOPS. This discrepancy may be due to differences in the testing environment; the manufacturer likely tested it on a Linux operating system. However, when tested with QD512, the SSD reaches the advertised 2.8 million IOPS.

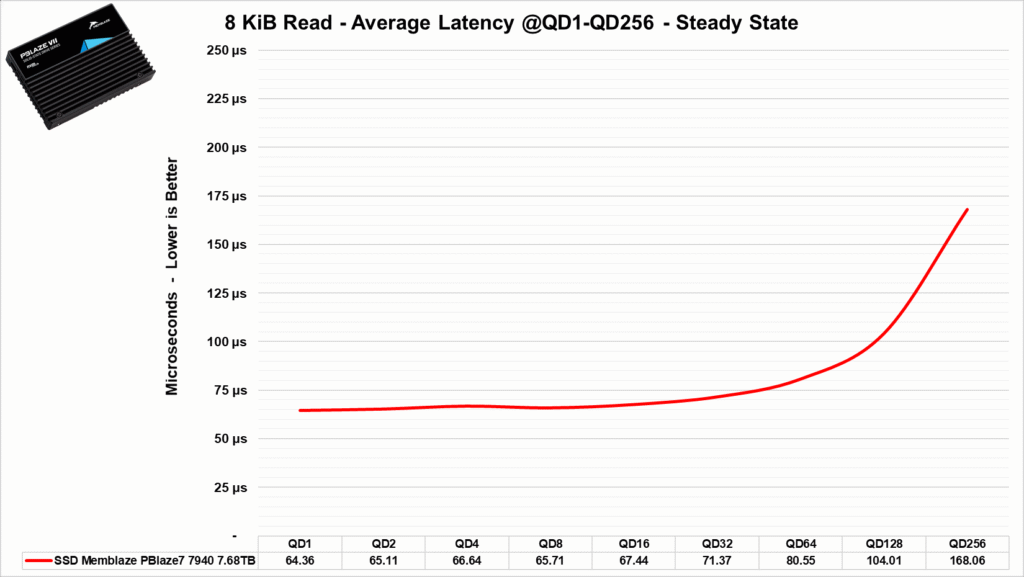

In terms of latency, read performance was even more impressive. Although latency increased with queue depth, the increase was quite small compared with the write results.

Benchmark: 4 KiB 70% Read 30% Write

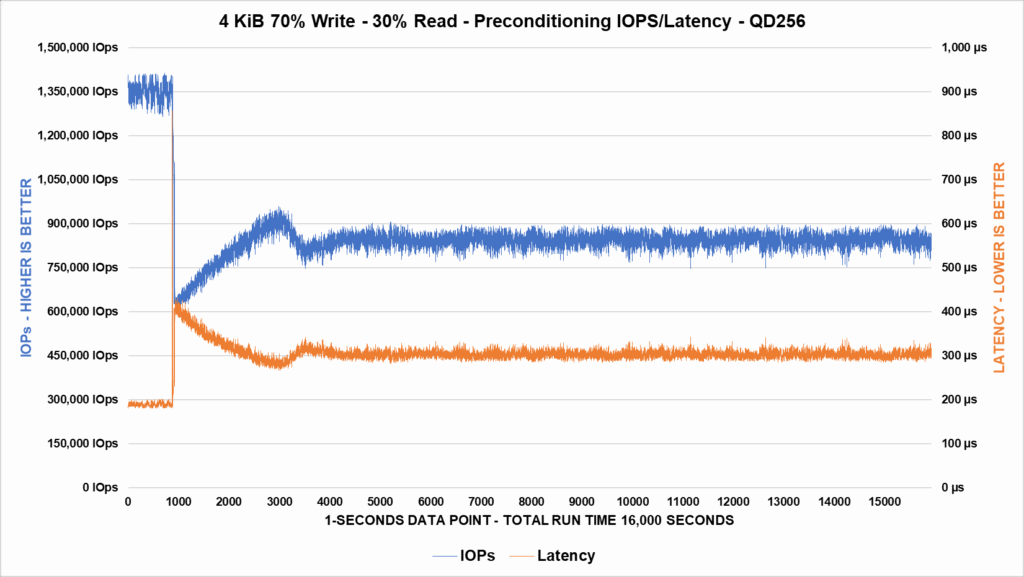

This is one of the most widely used workloads in SSD benchmarks, representing mixed use in a variety of servers and data centers. We will run this test for over 16,000 seconds to see how the SSD performs.

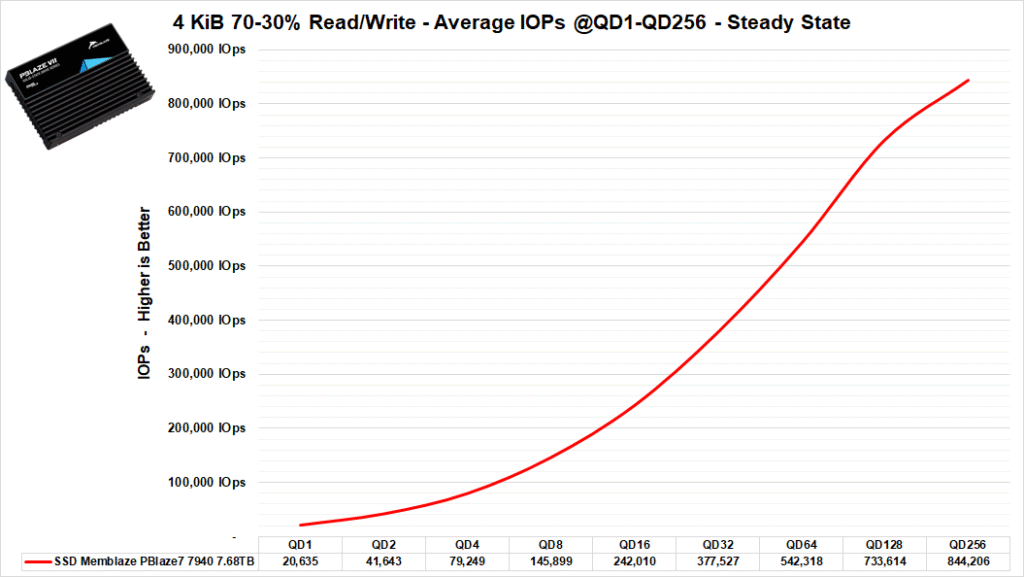

We can see that the SSD starts strong with over 1.35 million IOPS, but it soon drops and stabilizes, reaching the Steady State with around 820,000 IOPS after 5000 seconds.

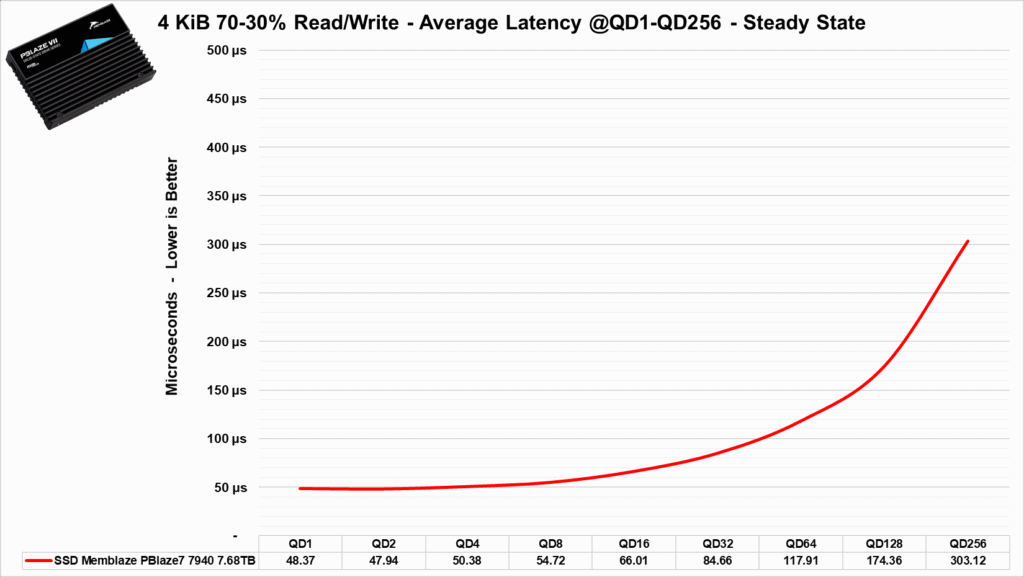

In terms of latency, it took the same amount of time to reach the Steady State.

In this test, we can see that Memblaze delivers excellent results up to a queue depth (QD) of 8 for this combination of controller and NAND flash. As for its latencies, we can observe that they remain quite stable up to QD64.

Random Read and Write 8 KiB

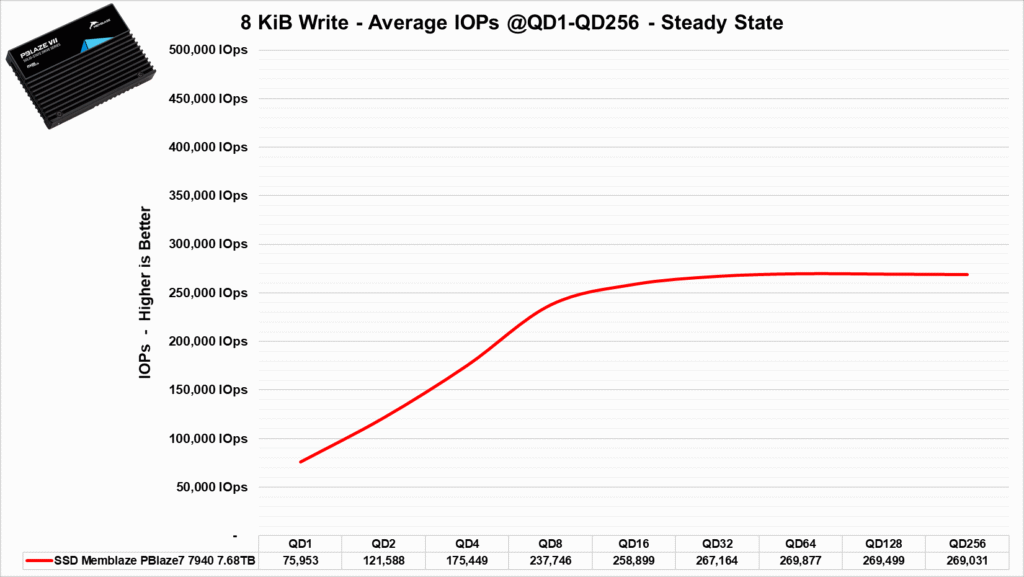

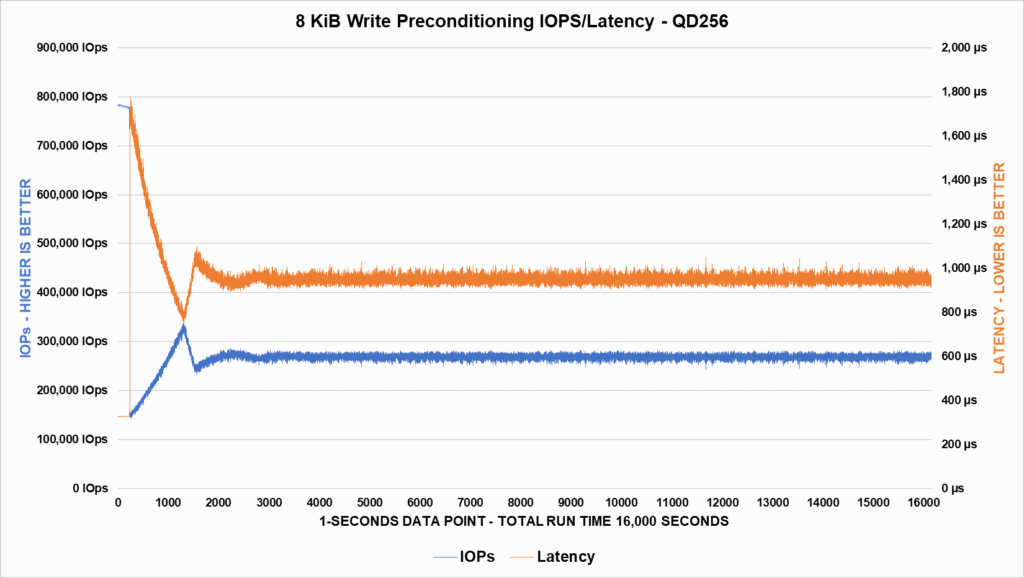

In the benchmark tests with 8 KiB blocks, which are widely used in virtualized environments and OLTP (Online Transaction Processing) scenarios, this Memblaze SSD delivers exceptionally consistent performance, maintaining high IOPS with low latencies. It is a solid choice for online transaction workloads and virtualized environments.

Over the 16,000-second run, we can see that the SSD reaches a steady state for both latency and bandwidth (IOPS) near the 3,000-second mark. After that, it maintains an average of over 270K IOPS while its latency remains below 1000µs. This makes it a reliable choice for applications with demanding online transaction workloads.

In terms of IOPS, it starts at around 75K at QD1 and increases as the queue depth (QD) grows, reaching its peak around QD16. From QD16 to QD256, the SSD stays between 260K and 270K IOPS. This kind of performance is quite impressive, especially at higher queue depths.

The latency behavior of this SSD does show a different pattern. While its bandwidth stabilizes at QD16 and higher, its latency increases significantly. However, when comparing this SSD to other competing models, it still stands out favorably in terms of latency, showcasing strong overall performance.
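This pattern follows from Little’s law (L = λW): with a fixed number of outstanding I/Os (the queue depth) and a throughput that no longer grows, the average latency per I/O must rise in proportion to queue depth. A minimal sketch, assuming a single worker whose queue is kept full, using the roughly 270K IOPS plateau reported above:

```python
def avg_latency_us(queue_depth: int, iops: float) -> float:
    """Little's law: W = L / λ, with L = outstanding I/Os and λ = IOPS."""
    return queue_depth / iops * 1e6


for qd in (16, 64, 256):
    print(f"QD{qd}: ~{avg_latency_us(qd, 270_000):.0f} µs average latency")
```

The QD256 estimate of roughly 950µs lines up with the sub-1000µs average latency reported for this workload. Note that this is an average; tail latencies (percentiles) are not captured by it.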

In reads, on the other hand, bandwidth increased significantly, surpassing 2.1 million IOPS. Latency was also more stable in this benchmark, remaining constant up to QD64 and increasing only slightly at higher QDs.

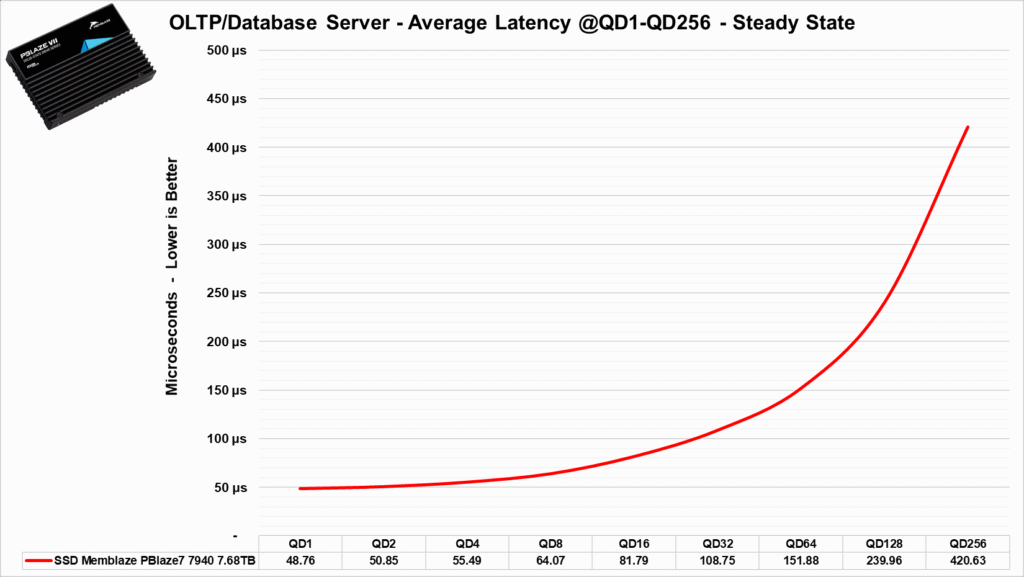

Benchmark: OLTP Server Workload

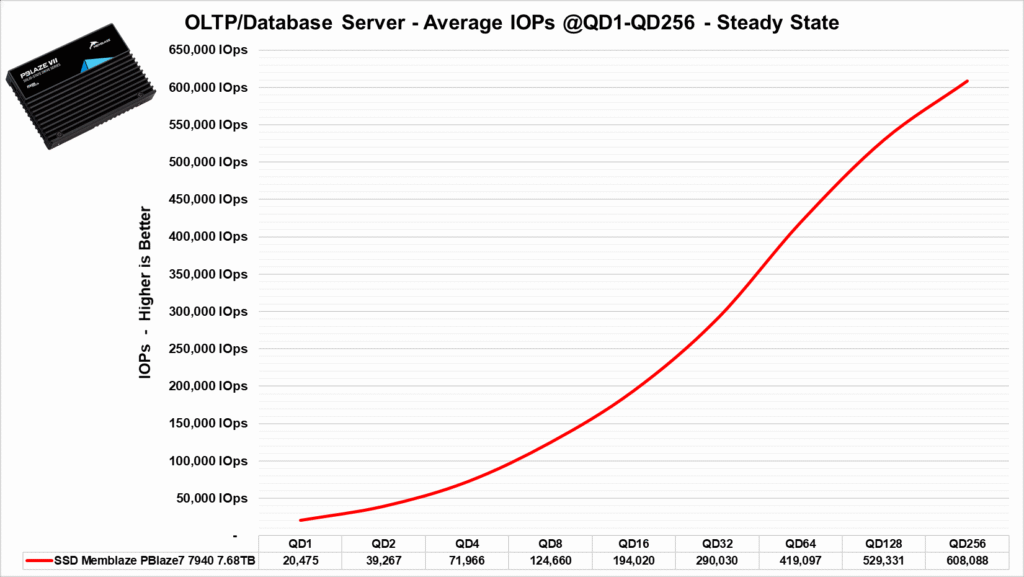

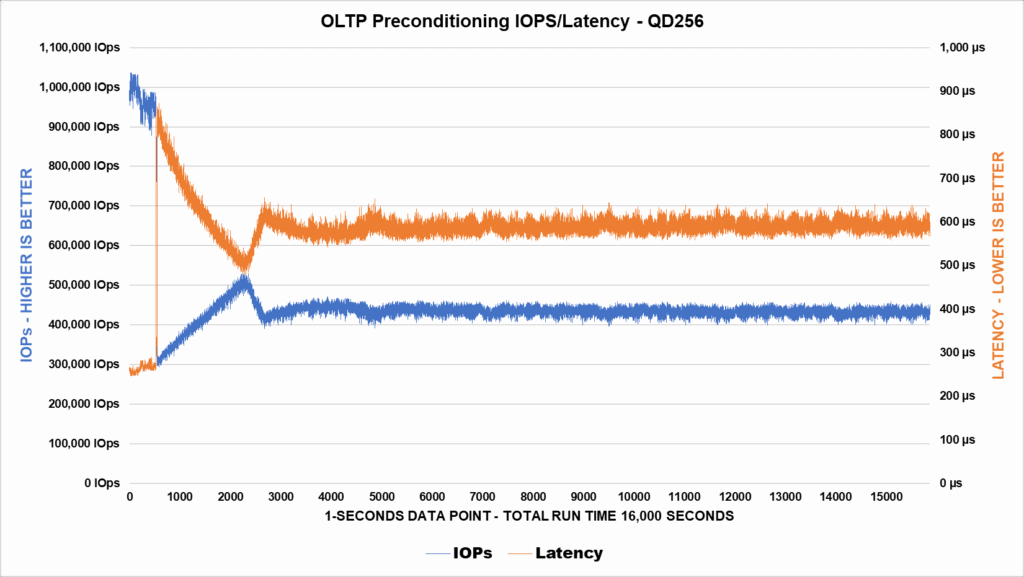

In this benchmark, we replicate a typical workload found in servers that process banking transactions or online shopping orders, as well as in high-frequency trading (HFT) environments, which involve the high-speed buying and selling of financial assets such as stocks, bonds, currencies, and commodities in large volumes.

As we conduct the SSD preconditioning, we can see that it stabilizes (Steady State) after approximately 5,000 seconds of the benchmark. During this time, its bandwidth stabilizes at a level above 400K IOPS, while its latency stabilizes at around 550µs.

We can observe that it can provide more than 600K IOPS at QD256 for larger servers with a large processing queue, while at lower QDs, it still delivers good performance that we will compare with other SSDs in the future.

In its latencies, we see something phenomenal. It manages to offer very low latencies up to QD16. Afterward, it increases slightly but still remains below latencies observed in other SSDs on the market, which I hope to discuss soon.

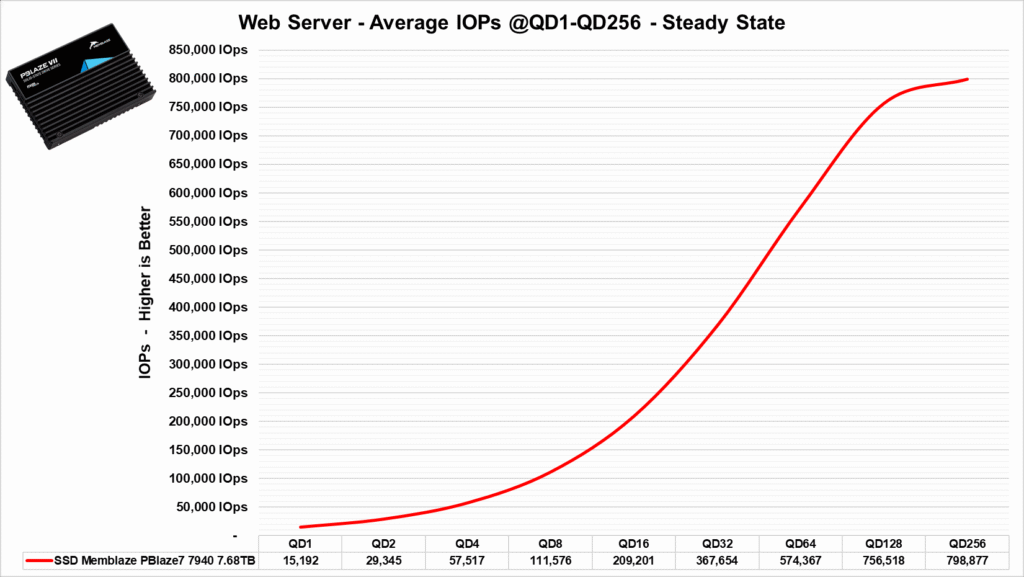

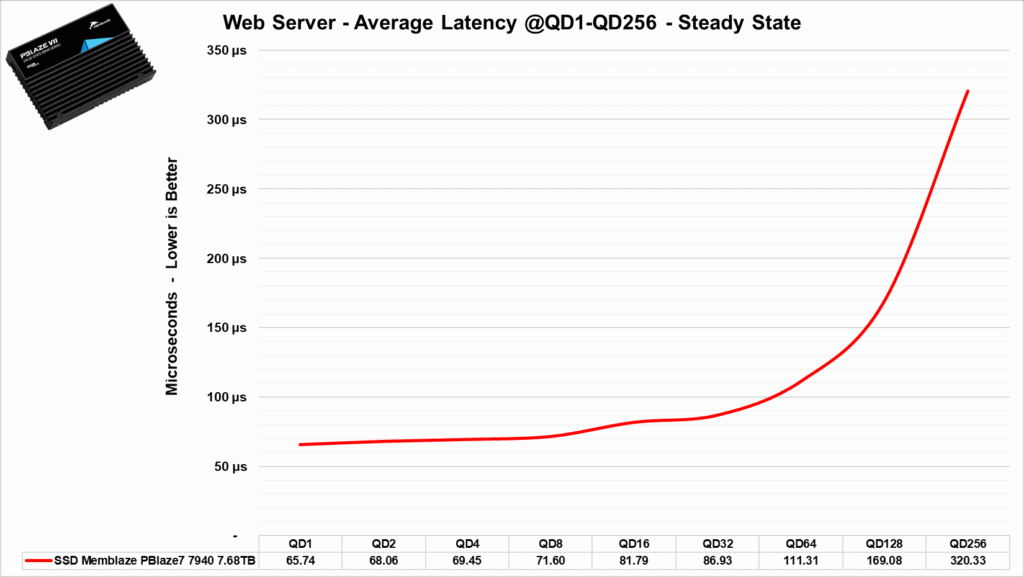

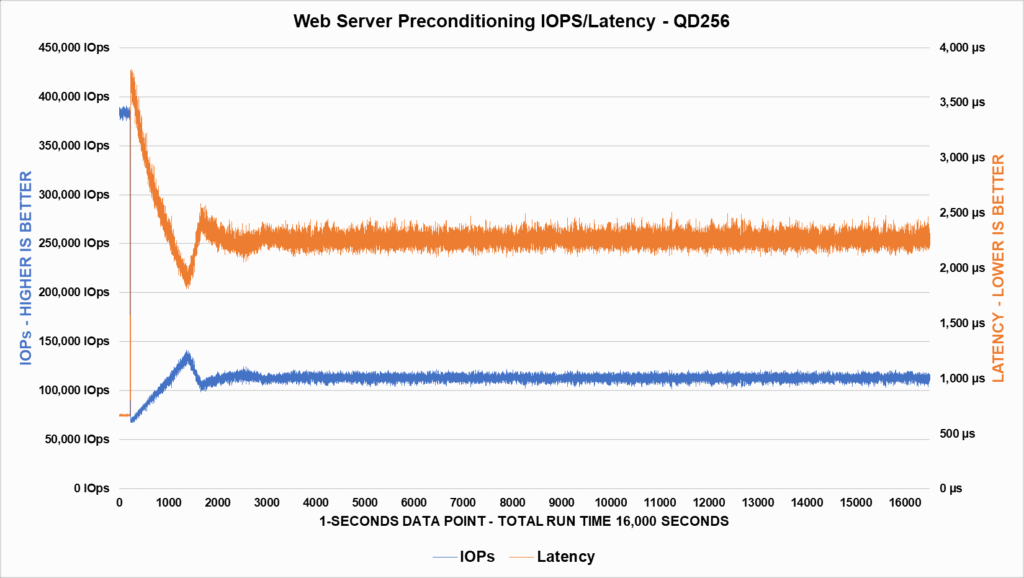

Benchmark: Web Server Workload

In this test, a typical workload found on “web servers” was simulated, which often involves working with different file sizes and block sizes, ranging from 512 bytes to 512KB. Additionally, various types of access, including Read and Write, as well as a mix of both at different percentages, were tested.

In this scenario, it takes approximately 3000 seconds to reach the Steady State, with latencies stabilizing around 2.3ms and bandwidth in the range of 110K IOPS.

This SSD has proven very capable with this type of workload, delivering almost 800K IOPS at QD256, although depending on the web server’s size, its workload should not exceed QD128. Nevertheless, it still provides good results at lower QD levels as well.

The same can be said about its latencies: they remain quite low at lower QD levels and only increase at higher levels such as QD256. This is a consequence of the very deep queue rather than a weakness: with so many requests outstanding, each one waits longer, even while the drive sustains its high bandwidth.

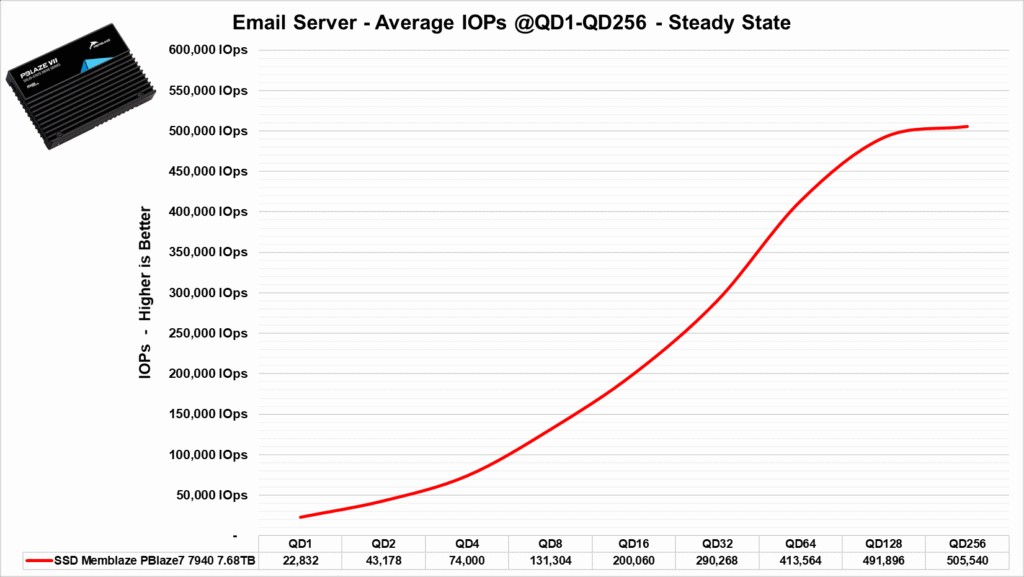

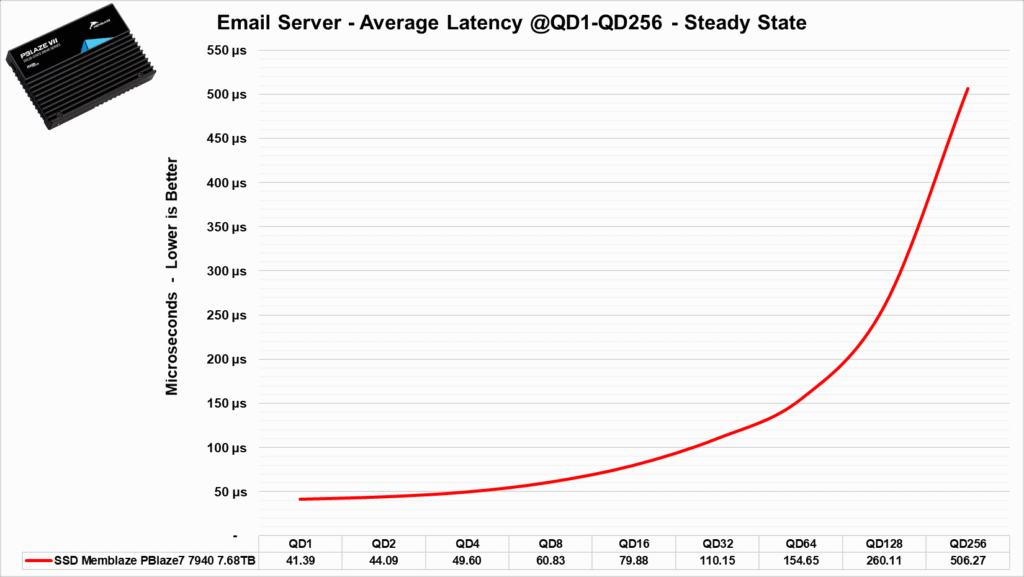

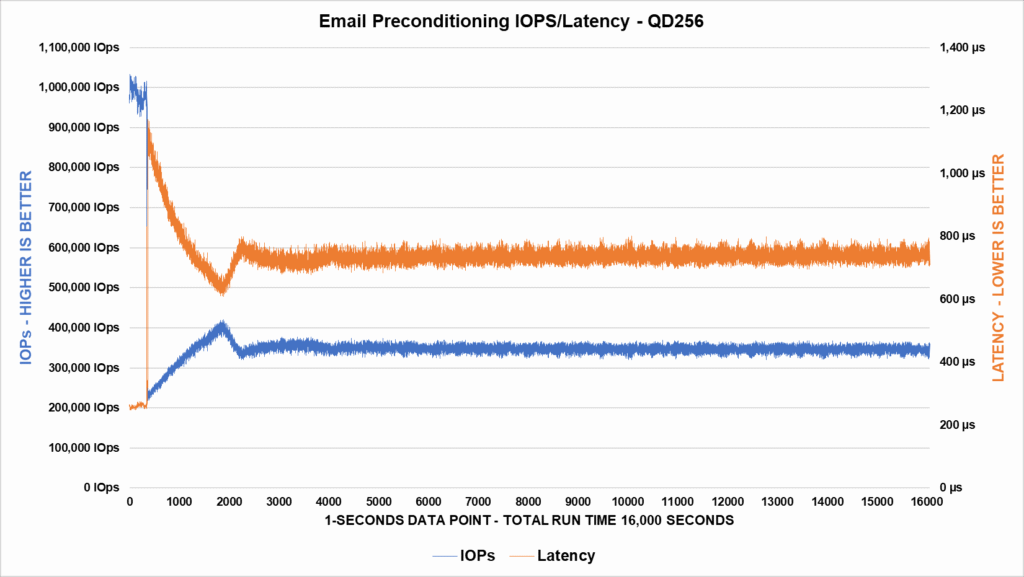

Benchmark: Email Server Workload

In this part of the analysis, we will use a typical workload found on email servers, which is traditionally known to work with 8 KiB block sizes with a 50% / 50% distribution (Random Read/Write). It is also considered a more demanding scenario for writing to the device.

We can observe that during this preparation, the SSD took a little over 4000 seconds to reach the Steady State, where it maintained consistent performance of around 350K IOPS with latencies close to 800µs.

In this other benchmark, starting with its bandwidth, we again see decent results at small QDs, although I would have liked them to be a bit higher. However, at larger QDs like 128 and 256, it excels and provides incredible results.

As for latency, the results were excellent, especially at small QDs.

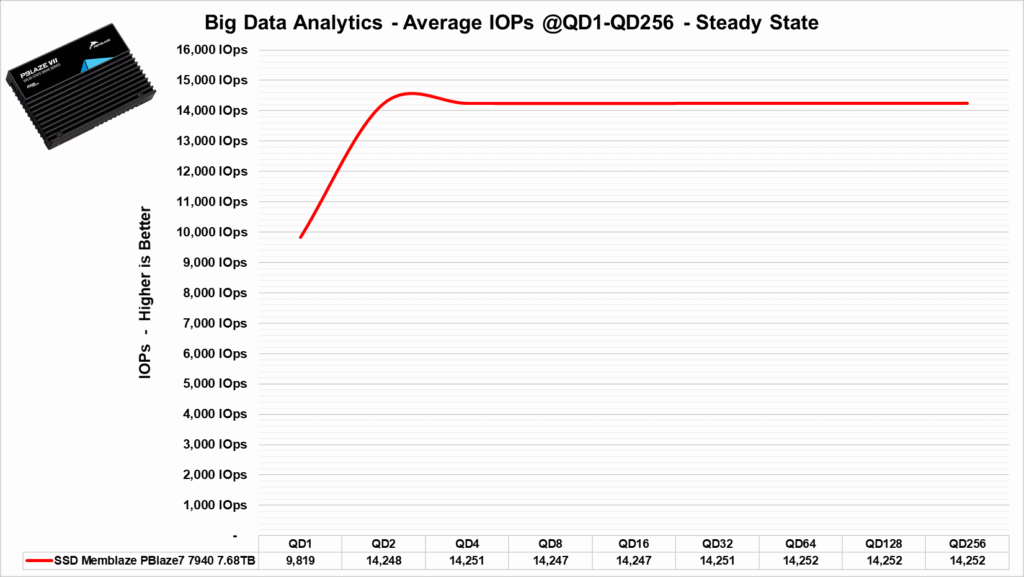

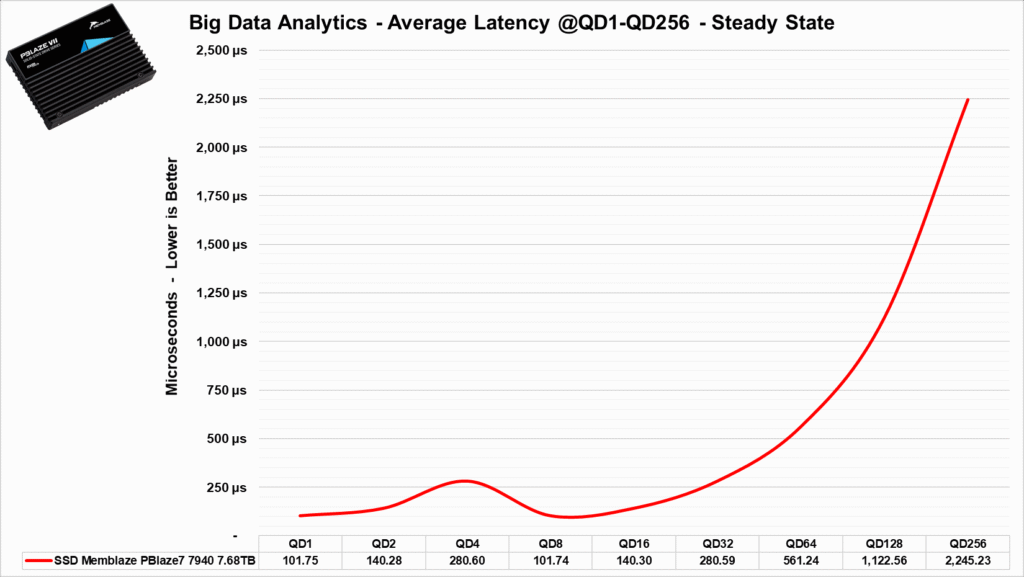

Benchmark: Big Data Analytics Workload

In this section of our review, we will simulate a data workload found in the industry, but what is BDA? Big data analytics is the process of examining and extracting valuable insights from massive and complex datasets to make informed decisions and improve business performance.

We can observe that throughout most of the bandwidth test, with the exception of its beginning at QD1 (Queue Depth 1), the SSD manages to deliver a quite satisfactory and consistent performance.

In the latency test, the SSD behaves stably, with latency rising only as the queue depth (QD) increases exponentially.

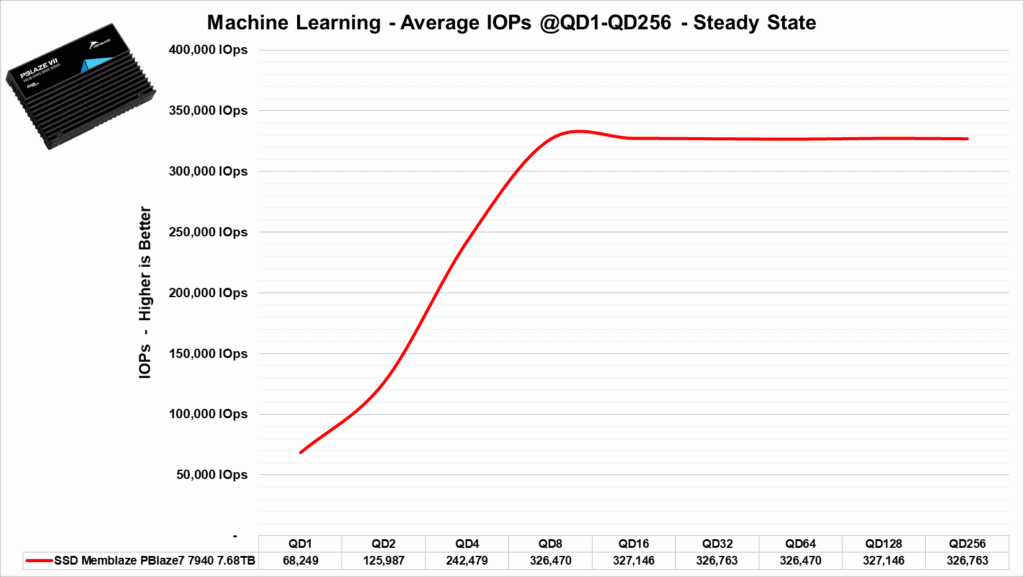

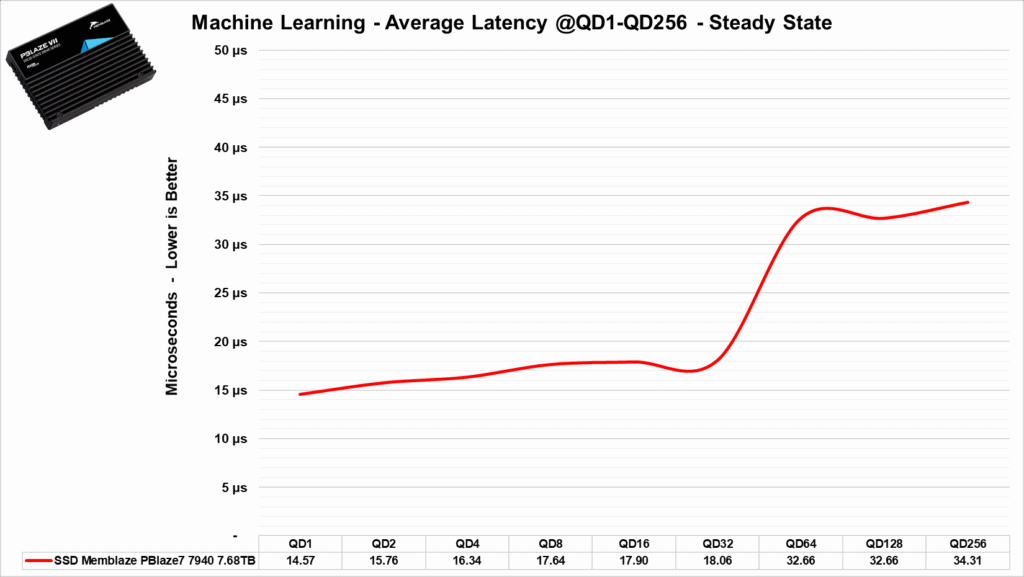

Benchmark: Machine Learning Workload

Machine Learning is a subfield of artificial intelligence that focuses on the development of algorithms and models that enable systems to learn and improve from data without explicit programming. This approach allows machines to identify patterns, make predictions, and make decisions based on acquired information, becoming a valuable tool in a variety of applications, from product recommendations to medical diagnostics.

In this benchmark, which uses 32 KiB blocks for random reads, the SSD reaches a peak of almost 330K IOPS from QD8 onward, a satisfactory result. However, its performance remained largely flat beyond that point.
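Converting IOPS into throughput helps compare random results at different block sizes with each other and with the sequential figures, since larger blocks move more data per operation. A quick sketch (decimal GB/s, block sizes in KiB), using the 32 KiB plateau above and the 2.8 million 4 KiB read IOPS reached at QD512:

```python
def iops_to_gb_per_s(iops: float, block_kib: int) -> float:
    """Throughput implied by an IOPS figure at a given block size."""
    return iops * block_kib * 1024 / 1e9


print(f"32 KiB @ 330K IOPS: {iops_to_gb_per_s(330_000, 32):.2f} GB/s")
print(f"4 KiB @ 2.8M IOPS:  {iops_to_gb_per_s(2_800_000, 4):.2f} GB/s")
```

Both land around 11 GB/s, below the 14 GB/s sequential read rating; on its own, this conversion doesn’t tell us what caps the 32 KiB curve.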

Its latencies were also good, maintaining a stable result with only a more noticeable increase from QD 64 to 256.

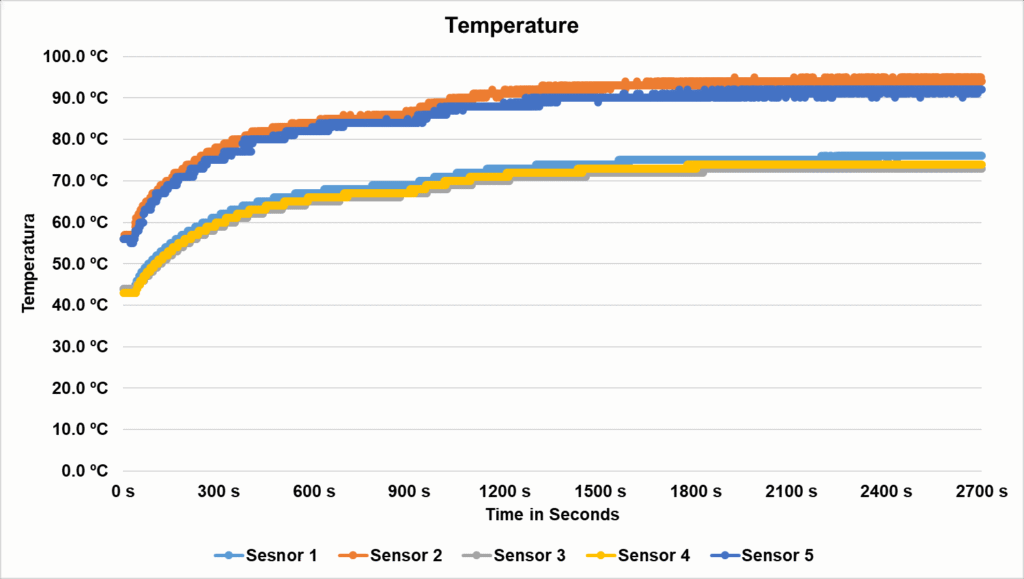

TEMPERATURE STRESS TEST

In this section of the analysis, we will monitor the SSD’s temperature during a stress test, where the SSD continuously receives files. This will help us determine whether any of its internal components throttle thermally, which could lead to bottlenecks or performance loss.

As seen in the chart, some of the SSD’s sensors report very high temperatures, above 96°C. From what we gathered, however, the main controller didn’t exceed 80°C and did not thermal-throttle, although the SSD’s exterior housing was warm to the touch.

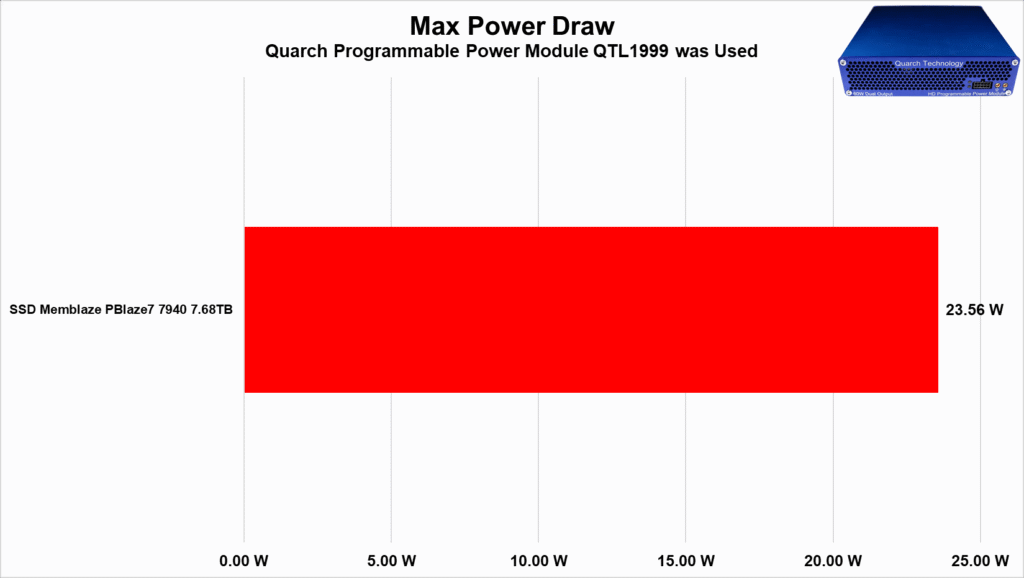

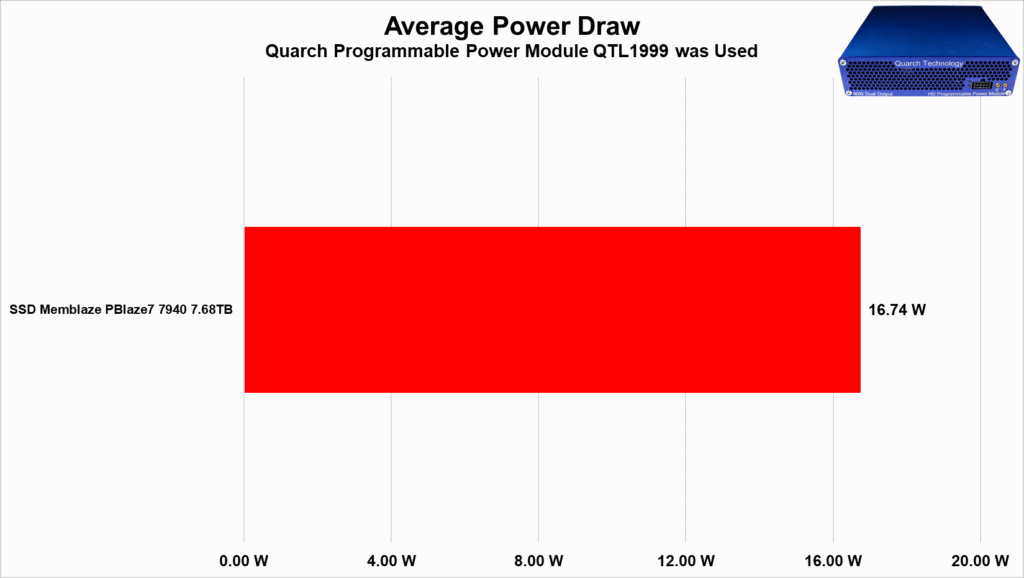

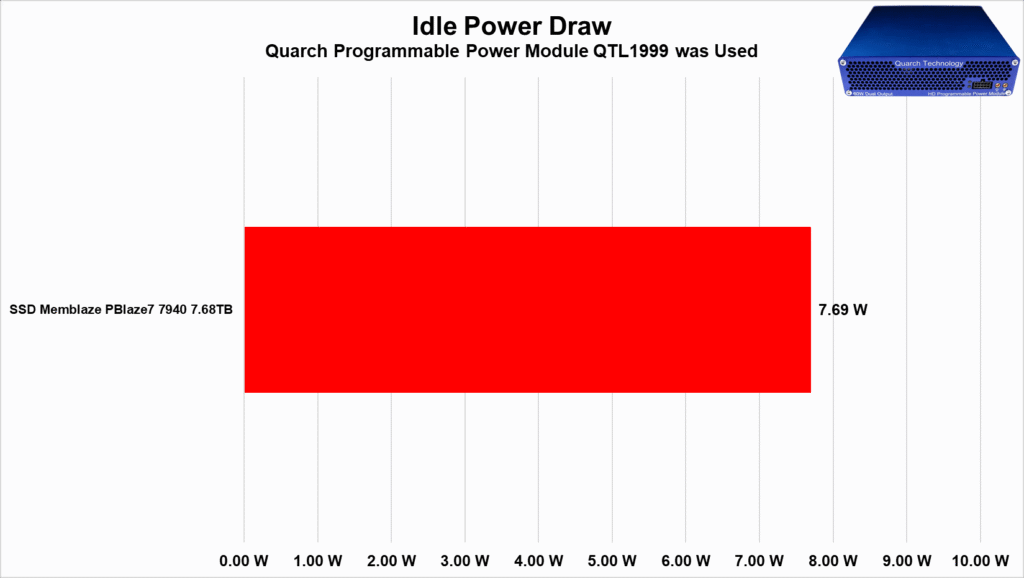

POWER DRAW

SSDs, like many other components in our system, have a certain power consumption. The most efficient ones can perform tasks that have been requested quickly and with relatively low power consumption, allowing them to return to their idle power states, where they tend to consume less energy.

In this part of the analysis, we will use the Quarch Programmable Power Module sent to us by Quarch Solutions (pictured above) to conduct these tests and determine how efficient the SSD is. In this methodology, three tests will be conducted: the SSD’s maximum power consumption, an average power consumption in practical and casual scenarios, and power consumption in idle mode.

As for its maximum power draw, in the worst-case scenario of our benchmark suite the drive stayed below the manufacturer’s rated 25W, which is a good result.

In a typical server activity scenario, it shows an average power consumption of around 16.7W, which is quite reasonable for an SSD in this category.

Lastly, and most importantly, there is the idle test, which represents the state in which the vast majority of SSDs spend most of their time during daily usage. Here the drive drew 7.69W. That would be a relatively high figure even as the maximum power draw of an M.2 SSD, but it shouldn’t be surprising: an enterprise data center SSD like this tends to consume significantly more power because of its more powerful controller, many more NAND flash dies, DRAM cache, numerous capacitors, VRM (Voltage Regulator Module) components, and Power Loss Protection circuitry.

That makes its idle result satisfactory, although it is above the manufacturer’s stated 5W figure.

CONCLUSION

Taking all of this into account, is it really worth investing in this SSD?

This SSD is clearly designed for the corporate sector and datacenters, not for consumers. Our analysis confirms that it offers exceptional performance for companies seeking high-performance SSDs. Now, let’s delve into its strengths and weaknesses.

ADVANTAGES

- Excellent sequential speeds, exceeding 14,000 MB/s.

- Random performance can exceed 2.18 million IOPS, depending on the workload.

- Excellent latencies depending on the workload.

- The SSD performs well in high queue depth (QD) Datacenter scenarios.

- The SSD features excellent internal construction with a great controller and high-quality NAND flash.

- The durability of this SSD appears to be on par with other products based on these NAND flash chips.

- It offers various form factors suitable for servers, including U.2, AIC HHHL, E1.S, and E3.S.

- It doesn’t suffer from thermal throttling, but the SSD does heat up under heavy or prolonged workloads.

- It supports AES-256 bit encryption and other enterprise features.

- Reasonable power consumption at idle for an SSD of this caliber.

- SSD with excellent energy efficiency.

DISADVANTAGES

- MSRP not yet announced.

- Doesn’t provide SSD management software.

- Warranty of 5 years, but currently only available in China
